Past JEE Main Entrance Papers

Overview

Random experiment

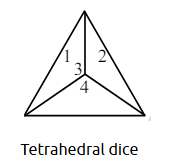

An experiment is called random if it has more than one possible outcome and it is not possible to predict with certainty which outcome will occur. For instance, when an ordinary coin is tossed, it is certain that the coin will land either heads up or tails up, but it is not known in advance which of the two will occur. Similarly, if a die is thrown once, any of the six numbers \(1,2,3,4,5,6\) may turn up, but we cannot be sure which one.

- Outcome: A possible result of a random experiment is called an outcome. For example, if the experiment consists of tossing a coin twice, some of the outcomes are \(HH\) and \(HT\).

- Sample Space: A sample space is the set of all possible outcomes of an experiment. In fact, it is the universal set S pertinent to a given experiment.

The sample space for the experiment of tossing a coin twice is given by

\(

S =\{ HH , HT , TH , TT \}

\)

The sample space for the experiment of drawing a card out of a deck is the set of all cards in the deck.
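As an illustration, a small sample space can be enumerated by machine. The sketch below is only one way to do it; the helper name `sample_space` is hypothetical, and each trial is assumed to be given as a finite collection of outcomes.

```python
from itertools import product

def sample_space(*trials):
    """Return all outcomes of performing the given trials in sequence."""
    return set(product(*trials))

coin = ("H", "T")
S = sample_space(coin, coin)  # tossing a coin twice
print(sorted("".join(outcome) for outcome in S))  # ['HH', 'HT', 'TH', 'TT']
```

The same helper applied to a single die, `sample_space(range(1, 7))`, gives six one-element tuples.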

Event

An event is a subset of a sample space S. For example, the event of drawing an ace from a deck is

\(A=\{\) Ace of Heart, Ace of Club, Ace of Diamond, Ace of Spade \(\}\)

Types of events

- Impossible and Sure Events: The empty set \(\phi\) and the sample space S describe events. In fact \(\phi\) is called an impossible event and \(S\), i.e., the whole sample space is called a sure event.

- Simple or Elementary Event: If an event E has only one sample point of a sample space, i.e., a single outcome of an experiment, it is called a simple or elementary event. The sample space of the experiment of tossing two coins is given by

\(

S =\{ HH , HT , TH , TT \}

\)

The event \(E_1=\{ HH \}\) containing a single outcome HH of the sample space S is a simple or elementary event. If one card is drawn from a well-shuffled deck, any particular card drawn, such as ‘Queen of Hearts’, is an elementary event.

- Compound Event: If an event has more than one sample point, it is called a compound event. For example, \(E_2 =\{ HH , HT \}\) is a compound event.

- Equally Likely Events: A number of simple events are said to be equally likely if there is no reason for one event to occur in preference to any other event.

- Complementary event: Given an event A , the complement of A is the event consisting of all sample space outcomes that do not correspond to the occurrence of \(A\).

The complement of A is denoted by \(A ^{\prime}\) or \(\overline{ A }\) or \(A ^{ C }\). It is also called the event ‘not A’. Further, \(P (\overline{ A })\) denotes the probability that A will not occur.

\(

A ^{\prime}=A ^{ C }=\overline{ A }= S - A =\{w: w \in S \text { and } w \notin A \}

\)

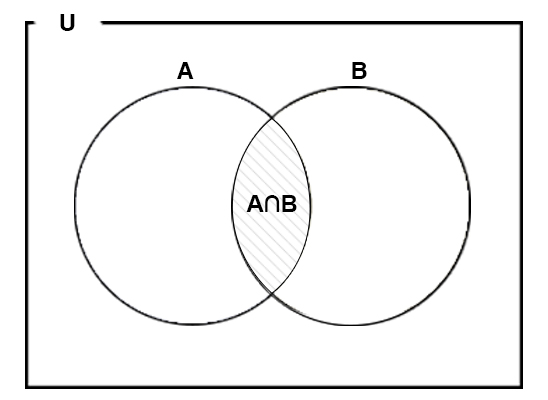

Event ‘\(A\) or \(B\)’

If \(A\) and \(B\) are two events associated with the same sample space, then the event ‘\(A\) or \(B\)’ is the same as the event \(A \cup B\) and contains all those elements which are in \(A\), in \(B\), or in both. Furthermore, \(P ( A \cup B )\) denotes the probability that \(A\) or \(B\) (or both) will occur.

Event ‘\(A\) and \(B\)’

If \(A\) and \(B\) are two events associated with the same sample space, then the event ‘\(A\) and \(B\)’ is the same as the event \(A \cap B\) and contains all those elements which are common to both \(A\) and \(B\). Furthermore, \(P ( A \cap B )\) denotes the probability that both \(A\) and \(B\) will occur simultaneously.

Event ‘\(A\) but not \(B\)’ (Difference \(A-B\))

The event \(A-B\) is the set of all those elements of the sample space \(S\) which are in \(A\) but not in \(B\), i.e., \(A - B = A \cap B ^{\prime}\).
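The event operations described above map directly onto set operations. The sketch below uses Python sets for one throw of a die; the two events chosen here are illustrative assumptions, not part of the definitions.

```python
S = {1, 2, 3, 4, 5, 6}   # sample space: one throw of a die
A = {1, 2, 3, 4}         # illustrative event: a number at most 4
B = {4, 5, 6}            # illustrative event: a number at least 4

a_or_b = A | B           # event 'A or B'  (union)
a_and_b = A & B          # event 'A and B' (intersection)
a_not_b = A - B          # event 'A but not B' (difference)
not_a = S - A            # complement of A

# The difference A - B equals A intersected with the complement of B.
assert a_not_b == A & (S - B)
```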

Mutually exclusive event:

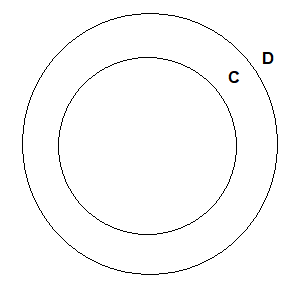

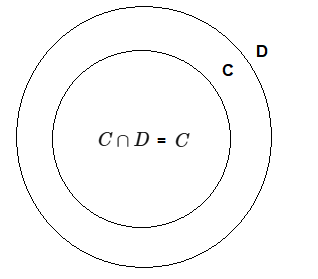

Two events \(A\) and \(B\) of a sample space S are mutually exclusive if the occurrence of any one of them excludes the occurrence of the other event. Hence, the two events \(A\) and \(B\) cannot occur simultaneously, and thus \(P ( A \cap B )=0\).

Remark

Simple or elementary events of a sample space are always mutually exclusive. For example, the elementary events \(\{1\},\{2\},\{3\},\{4\},\{5\}\) and \(\{6\}\) of the experiment of throwing a die are mutually exclusive.

Consider the experiment of throwing a die once.

The events \(E =\) getting an even number and \(F =\) getting an odd number are mutually exclusive events because \(E \cap F =\phi\).

Note: For a given sample space, there may be two or more mutually exclusive events.

Exhaustive events

If \(E _1, E _2, \ldots, E _n\) are \(n\) events of a sample space S and if

\(

E _1 \cup E _2 \cup E _3 \cup \ldots \cup E _n=\bigcup_{i=1}^n E _i= S

\)

then \(E _1, E _2, \ldots, E _n\) are called exhaustive events.

In other words, events \(E _1, E _2, \ldots, E _n\) of a sample space S are said to be exhaustive if at least one of them necessarily occurs whenever the experiment is performed.

Consider the example of rolling a die. We have \(S=\{1,2,3,4,5,6\}\). Define the two events.

\(A\): ‘a number less than or equal to 4 appears.’

\(B\) : ‘a number greater than or equal to 4 appears.’

Now

\(A=\{1,2,3,4\}, B=\{4,5,6\}\)

\(A \cup B=\{1,2,3,4,5,6\}=S\)

Such events \(A\) and \(B\) are called exhaustive events.

Mutually exclusive and exhaustive events

If \(E _1, E _2, \ldots, E _n\) are \(n\) events of a sample space S and if \(E _i \cap E _j=\phi\) for every \(i \neq j\), i.e., \(E _i\) and \(E _j\) are pairwise disjoint and \(\bigcup_{i=1}^n E _i= S\), then the events \(E _1, E _2, \ldots, E _n\) are called mutually exclusive and exhaustive events.

Consider the example of rolling a die.

We have \(\quad S=\{1,2,3,4,5,6\}\)

Let us define the three events as

\(A = \) a number which is a perfect square

\(B =\) a prime number

\(C = \) a number which is greater than or equal to 6

Now \(A=\{1,4\}, B=\{2,3,5\}, C=\{6\}\)

Note that \(A \cup B \cup C =\{1,2,3,4,5,6\}= S\). Therefore, \(A , B\) and \(C\) are exhaustive events.

Also \(A \cap B = B \cap C = C \cap A =\phi\)

Hence, the events are pairwise disjoint and thus mutually exclusive.
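The two conditions can be checked mechanically for small examples. The following is a minimal sketch with hypothetical helper names (`is_exhaustive`, `is_mutually_exclusive`), assuming events are given as Python sets.

```python
from itertools import combinations

def is_exhaustive(events, sample_space):
    """True if the union of the events is the whole sample space."""
    return set().union(*events) == set(sample_space)

def is_mutually_exclusive(events):
    """True if every pair of events is disjoint."""
    return all(a.isdisjoint(b) for a, b in combinations(events, 2))

S = {1, 2, 3, 4, 5, 6}
partition = [{1, 4}, {2, 3, 5}, {6}]   # A, B, C from the example above
overlapping = [{1, 2, 3, 4}, {4, 5, 6}]  # exhaustive, but both contain 4
```

Here `partition` passes both checks, while `overlapping` is exhaustive but not mutually exclusive.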

The classical approach is useful when all the outcomes of the experiment are equally likely, because we can then use logic to assign probabilities. To understand the classical method, consider the experiment of tossing a fair coin. Here, there are two equally likely outcomes: head \(( H )\) and tail \(( T )\). When the elementary outcomes are taken as equally likely, we have a uniform probability model. If there are \(k\) elementary outcomes in \(S\), each is assigned the probability \(\frac{1}{k}\). Therefore, the probability of observing a head, denoted by \(P ( H )\), is \(\frac{1}{2}=0.5\), and the probability of observing a tail, denoted by \(P ( T )\), is also \(\frac{1}{2}=0.5\). Notice that each probability is between 0 and 1. Further, H and T are all the outcomes of the experiment and \(P ( H )+ P ( T )=1\).

Classical definition

If all of the outcomes of a sample space are equally likely, then the probability that an event will occur is equal to the ratio:

\(

\frac{\text { The number of outcomes favourable to the event }}{\text { The total number of outcomes of the sample space }}

\)

Suppose that an event \(E\) can happen in \(m\) ways out of a total of \(n\) possible equally likely ways.

Then the classical probability of occurrence of the event is denoted by

\(

P ( E )=\frac{m}{n}

\)

The probability of non occurrence of the event E is denoted by

\(

P (\text { not } E )=\frac{n-m}{n}=1-\frac{m}{n}=1- P ( E )

\)

Thus \(P ( E )+ P (\text { not } E )=1\)

The event ‘not \(E\)’ is denoted by \(\overline{ E }\) or \(E^{\prime}\) (complement of \(E\)).

Therefore \(P (\overline{ E })=1- P ( E )\)
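The classical definition translates into a one-line computation when the sample space is small enough to list. The sketch below assumes equally likely outcomes and uses exact fractions; the function name `classical_probability` is hypothetical.

```python
from fractions import Fraction

def classical_probability(event, sample_space):
    """P(E) = m / n for equally likely outcomes."""
    S = set(sample_space)
    E = set(event) & S              # only outcomes that belong to S count
    return Fraction(len(E), len(S))

die = range(1, 7)
p_even = classical_probability({2, 4, 6}, die)
p_not_even = classical_probability({1, 3, 5}, die)
print(p_even, p_not_even)           # 1/2 1/2
```

The complement rule \(P(\overline{E})=1-P(E)\) can be confirmed on the same example, since `p_not_even == 1 - p_even`.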

Axiomatic approach to probability

Let S be the sample space of a random experiment. The probability P is a real-valued function whose domain is the power set of \(S\), written \(\mathcal{P}(S)\), and whose values lie in the interval \([0,1]\), i.e., \(P : \mathcal{P}( S ) \rightarrow[0,1]\), satisfying the following axioms.

(i) For any event \(E , P ( E ) \geq 0\).

(ii) \(P ( S )=1\).

(iii) If \(E\) and \(F\) are mutually exclusive events, then \(P ( E \cup F )= P ( E )+ P ( F )\).

It follows from (iii), on taking \(E=S\) and \(F=\phi\), that \(P (\phi)=0\).

Let \(S\) be a sample space containing elementary outcomes \(w_1, w_2, \ldots, w_n\), i.e., \(S =\left\{w_1, w_2, \ldots, w_n\right\}\)

It follows from the axiomatic definition of probability that

- \(0 \leq P \left(w_i\right) \leq 1\) for each \(w_i \in S\)

- \(P \left(w_1\right)+ P \left(w_2\right)+\ldots+ P \left(w_n\right)=1\)

- \(P ( A )=\sum_{w_i \in A} P \left(w_i\right)\) for any event A containing elementary events \(w_i\)

For example, if a fair coin is tossed once

\(P ( H )= P ( T )=\frac{1}{2}\) satisfies the three axioms of probability.

Now suppose the coin is not fair and has double the chances of falling heads up as compared to the tails, then \(P ( H )=\frac{2}{3}\) and \(P ( T )=\frac{1}{3}\).

This assignment of probabilities is also valid for \(H\) and \(T\), as it satisfies the axiomatic definition.
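For a finite sample space, the consequences listed above give a quick validity check for any proposed assignment. The sketch below is illustrative only; `is_valid_assignment` is a hypothetical helper, and probabilities are stored as exact fractions to avoid rounding issues.

```python
from fractions import Fraction

def is_valid_assignment(probabilities):
    """Check 0 <= P(w) <= 1 for every outcome and that the values sum to 1."""
    values = list(probabilities.values())
    return all(0 <= p <= 1 for p in values) and sum(values) == 1

fair = {"H": Fraction(1, 2), "T": Fraction(1, 2)}
biased = {"H": Fraction(2, 3), "T": Fraction(1, 3)}
broken = {"H": Fraction(2, 3), "T": Fraction(2, 3)}  # sums to 4/3, so not valid
```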

Probabilities of equally likely outcomes

Let a sample space of an experiment be \(S =\left\{w_1, w_2, \ldots, w_n\right\}\) and suppose that all the outcomes are equally likely to occur i.e., the chance of occurrence of each simple event must be the same i.e., \(P \left(w_i\right)=p\) for all \(w_i \in S\), where \(0 \leq p \leq 1\)

Since

\(

\begin{aligned}

& \sum_{i=1}^n P\left(w_i\right)=1 \\

& p+p+p+\ldots+p \;(n \text { times })=1 \\

& \Rightarrow \quad n p=1, \text { i.e., } \quad p=\frac{1}{n}

\end{aligned}

\)

Let S be the sample space and E be an event, such that \(n(S)=n\) and \(n( E )=m\). If each outcome is equally likely, then it follows that

\(

P ( E )=\frac{m}{n}=\frac{\text { Number of outcomes favourable to } E }{\text { Total number of possible outcomes }}

\)

Odds Against and Odds in Favour of an Event:

Let there be \(m + n\) equally likely, mutually exclusive and exhaustive cases, out of which an event A occurs in \(m\) cases and does not occur in \(n\) cases. Then, by definition, the probability of occurrence of event \(A\) is \(P(A)=\frac{m}{m+n}\).

The probability of non-occurrence of event \(A\) is \(P\left(A^{\prime}\right)=\frac{n}{m+n}\), so \(P ( A ): P \left( A ^{\prime}\right)= m : n\).

Thus the odds in favour of the occurrence of the event \(A\) are defined by \(m : n\), i.e., \(P ( A ): P \left( A ^{\prime}\right)\), and the odds against the occurrence of the event \(A\) are defined by \(n : m\), i.e., \(P \left( A ^{\prime}\right) : P ( A )\).
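Converting a probability into odds is a matter of reducing the ratio \(P(A) : P(A^{\prime})\) to lowest terms. A minimal sketch, with hypothetical function names and assuming \(P(A) \neq 1\) so that the ratio is defined:

```python
from fractions import Fraction

def odds_in_favour(p):
    """Return (m, n) with P(A) : P(A') = m : n in lowest terms."""
    p = Fraction(p)
    ratio = p / (1 - p)              # undefined when P(A) = 1
    return ratio.numerator, ratio.denominator

def odds_against(p):
    """Return (n, m), the odds against the event."""
    m, n = odds_in_favour(p)
    return n, m

print(odds_in_favour(Fraction(3, 7)))   # (3, 4)
print(odds_against(Fraction(3, 7)))     # (4, 3)
```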

Addition rule of probability

If \(A\) and \(B\) are any two events in a sample space \(S\), then the probability that at least one of the events \(A\) or \(B\) will occur is given by

\(

P ( A \cup B )= P ( A )+ P ( B )- P ( A \cap B )

\)

Similarly, for three events \(A\), \(B\) and \(C\), we have

\(

\begin{aligned}

& P ( A \cup B \cup C )= P ( A )+ P ( B )+ P ( C )- P ( A \cap B )- P ( A \cap C )- P ( B \cap C )+ \\

& P ( A \cap B \cap C )

\end{aligned}

\)

General form of addition theorem (Principle of Inclusion-Exclusion)

For \(n\) events \(A_1, A_2, A_3, \ldots, A_n\) in \(S\), we have

\(

\begin{aligned}

P\left(A_1 \cup A_2 \cup \ldots \cup A_n\right)= & \sum_{i=1}^{n} P\left(A_i\right)-\sum_{i<j} P\left(A_i \cap A_j\right)+\sum_{i<j<k} P\left(A_i \cap A_j \cap A_k\right) \\

& -\ldots+(-1)^{n-1} P\left(A_1 \cap A_2 \cap \ldots \cap A_n\right)

\end{aligned}

\)
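The alternating sum can be checked against a direct count on a small, equally likely sample space. The sketch below is one possible way to do so; the helper names and the three die events are assumptions chosen for illustration.

```python
from fractions import Fraction
from itertools import combinations

def prob(event, sample_space):
    """Classical probability on an equally likely sample space."""
    S = set(sample_space)
    return Fraction(len(set(event) & S), len(S))

def union_by_inclusion_exclusion(events, sample_space):
    """P(A_1 U ... U A_n) via the alternating sum over all intersections."""
    total = Fraction(0)
    for r in range(1, len(events) + 1):
        sign = (-1) ** (r - 1)
        for group in combinations(events, r):
            common = set.intersection(*map(set, group))
            total += sign * prob(common, sample_space)
    return total

S = range(1, 7)
events = [{1, 2, 3}, {3, 4}, {2, 4}]          # illustrative events on a die
direct = prob(set().union(*events), S)        # count the union directly
via_formula = union_by_inclusion_exclusion(events, S)
```

Both routes give \(\frac{4}{6}=\frac{2}{3}\) for these events, since the union is \(\{1,2,3,4\}\).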

Addition rule for mutually exclusive events

If \(A\) and \(B\) are disjoint sets, then

\(P ( A \cup B )= P ( A )+ P ( B ) \quad [\) since \(P ( A \cap B )= P (\phi)=0\), where A and B are disjoint \(]\).

The addition rule for mutually exclusive events can be extended to more than two events.

Conditional Probability

If \(A\) and \(B\) are any events in \(S\), then the conditional probability of \(B\) relative to \(A\), i.e., the probability of occurrence of \(B\) when \(A\) has occurred, is given by

\(

P(B / A)=\frac{P(B \cap A)}{P(A)}, \quad \text { provided } P(A) \neq 0

\)

Bayes’ Theorem

It is a way of finding a probability when we know certain other probabilities.

The formula is:

\(

P(A \mid B)=\frac{P(A) P(B \mid A)}{P(B)}

\)

Which tells us: how often \(A\) happens given that \(B\) happens, written \(P ( A \mid B )\),

When we know: how often \(B\) happens given that \(A\) happens, written \(P ( B \mid A )\)

and how likely \(A\) is on its own, written \(P ( A )\)

and how likely B is on its own, written \(P ( B )\)

“A” With Three (or more) Cases

When “\(A\)” has two cases (\(A\) and not \(A\)), the bottom line \(P(B)\) can be expanded by total probability as \(P(A) P(B \mid A)+P(\text{not } A) P(B \mid \text{not } A)\).

When “\(A\)” has 3 or more cases we include them all in the bottom line:

\(

P\left(A_1 \mid B\right)=\frac{P\left(A_1\right) P\left(B \mid A_1\right)}{P\left(A_1\right) P\left(B \mid A_1\right)+P\left(A_2\right) P\left(B \mid A_2\right)+P\left(A_3\right) P\left(B \mid A_3\right)+\ldots}

\)
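The multi-case formula can be sketched as a short function. The name `posterior` and the numbers in the example are hypothetical; the cases \(A_1, A_2, \ldots\) are assumed to be mutually exclusive and exhaustive so that the bottom line equals \(P(B)\).

```python
from fractions import Fraction

def posterior(priors, likelihoods, k):
    """P(A_k | B) from priors P(A_i) and likelihoods P(B | A_i)."""
    evidence = sum(p * l for p, l in zip(priors, likelihoods))  # P(B)
    return priors[k] * likelihoods[k] / evidence

# Illustrative numbers only.
priors = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]
likelihoods = [Fraction(1, 10), Fraction(2, 10), Fraction(3, 10)]
print(posterior(priors, likelihoods, 0))   # 3/10
```

The posteriors over all cases add up to 1, which is a useful sanity check when working such problems by hand.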


1. Question

If an unbiased dice is rolled thrice, then the probability of getting a greater number in the \(i^{\text {th }}\) roll than the number obtained in the \((i-1)^{\text {th }}\) roll, \(i=2,3\), is equal to [JEE Main 2024 (Online) 9th April Evening Shift]

Hint

Let’s denote the outcomes of the three rolls as \(X_1, X_2\), and \(X_3\), where \(X_i\) represents the number obtained in the \(i^{\text {th }}\) roll. We are looking for the probability that:

\(

X_2>X_1 \text { and } X_3>X_2

\)

The total number of outcomes when rolling a dice three times is:

\(

6^3=216

\)

Let’s count the favorable outcomes, i.e., the sequences in which every roll shows a strictly higher number than the previous roll. These sequences are:

\(

\begin{aligned}

& (1,2,3) \\

& (1,2,4) \\

& (1,2,5) \\

& (1,2,6) \\

& (1,3,4) \\

& (1,3,5) \\

& (1,3,6) \\

& (1,4,5) \\

& (1,4,6) \\

& (1,5,6) \\

& (2,3,4) \\

& (2,3,5) \\

& (2,3,6) \\

& (2,4,5) \\

& (2,4,6) \\

& (2,5,6) \\

& (3,4,5) \\

& (3,4,6) \\

& (3,5,6) \\

& (4,5,6)

\end{aligned}

\)

Clearly, there are 20 such favorable outcomes. Hence, the probability is given by:

\(

\frac{\text { Number of favorable outcomes }}{\text { Total number of outcomes }}=\frac{20}{216}=\frac{5}{54}

\)

Alternate:

Let \(x _1, x _2, x _3\) be the numbers on \(1^{\text {st }}, 2^{\text {nd }} \& 3^{\text {rd }}\) throw respectively.

Given: \(x _1< x _2< x _3\)

\(\therefore\) Favorable cases \(={ }^6 C _3=20\), since each choice of three distinct numbers can be arranged in increasing order in exactly one way.

Total cases \(=6^3=216\)

Prob \(=\frac{{ }^6 C _3}{216}=\frac{20}{216}=\frac{5}{54}\)
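The count of 20 can be confirmed by brute force over all 216 outcomes. This is only a verification sketch, not part of the original solution.

```python
from fractions import Fraction
from itertools import product

rolls = list(product(range(1, 7), repeat=3))              # all 6^3 outcomes
favourable = [r for r in rolls if r[0] < r[1] < r[2]]     # strictly increasing
p = Fraction(len(favourable), len(rolls))
print(len(favourable), p)                                 # 20 5/54
```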


2. Question

There are three bags \(X, Y\) and \(Z\). Bag \(X\) contains 5 one-rupee coins and 4 five-rupee coins; Bag \(Y\) contains 4 one-rupee coins and 5 five-rupee coins and Bag \(Z\) contains 3 one-rupee coins and 6 five-rupee coins. A bag is selected at random and a coin drawn from it at random is found to be a one-rupee coin. Then the probability, that it came from bag \(Y\), is : [JEE Main 2024 (Online) 8th April Evening Shift]

Hint

To solve this problem, we use Bayes’ theorem. Let’s define the events:

\(X\) : Selecting bag \(X\)

\(Y\) : Selecting bag \(Y\)

\(Z\) : Selecting bag \(Z\)

\(A\) : Drawing a one-rupee coin

We are given that a bag is selected at random, so the probabilities for choosing any of the bags are:

\(

P(X)=P(Y)=P(Z)=\frac{1}{3}

\)

Next, we need the probability of drawing a one-rupee coin from each bag:

From bag \(X: P(A \mid X)=\frac{5}{5+4}=\frac{5}{9}\)

From bag \(Y: P(A \mid Y)=\frac{4}{4+5}=\frac{4}{9}\)

From bag \(Z: P(A \mid Z)=\frac{3}{3+6}=\frac{3}{9}=\frac{1}{3}\)

We need to find the probability that the coin came from bag \(Y\) given that a one-rupee coin was drawn, i.e., we need \(P(Y \mid A)\). Using Bayes’ theorem:

\(

P(Y \mid A)=\frac{P(A \mid Y) \cdot P(Y)}{P(A)}

\)

To find \(P(A)\), the total probability of drawing a one-rupee coin can be calculated as follows:

\(

P(A)=P(A \mid X) \cdot P(X)+P(A \mid Y) \cdot P(Y)+P(A \mid Z) \cdot P(Z)

\)

Substituting the values:

\(

P(A)=\left(\frac{5}{9} \cdot \frac{1}{3}\right)+\left(\frac{4}{9} \cdot \frac{1}{3}\right)+\left(\frac{1}{3} \cdot \frac{1}{3}\right)

\)

Calculating the above, we get:

\(

P(A)=\frac{5}{27}+\frac{4}{27}+\frac{1}{9}

\)

Note that \(\frac{1}{9}=\frac{3}{27}\), so:

\(

P(A)=\frac{5}{27}+\frac{4}{27}+\frac{3}{27}=\frac{12}{27}=\frac{4}{9}

\)

Now, substituting back into Bayes’ theorem:

\(

P(Y \mid A)=\frac{\left(\frac{4}{9}\right) \cdot\left(\frac{1}{3}\right)}{\frac{4}{9}}=\frac{4}{9} \cdot \frac{1}{3} \cdot \frac{9}{4}=\frac{1}{3}

\)
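The same computation can be reproduced with exact fractions. The sketch below uses the bag contents from the question; the variable names are illustrative.

```python
from fractions import Fraction

# (one-rupee coins, five-rupee coins) in each bag, as given in the question
bags = {"X": (5, 4), "Y": (4, 5), "Z": (3, 6)}
prior = Fraction(1, 3)                          # each bag equally likely

# P(bag and one-rupee coin) for each bag
joint = {name: prior * Fraction(one, one + five)
         for name, (one, five) in bags.items()}
p_one = sum(joint.values())                     # total probability P(A)
p_Y_given_one = joint["Y"] / p_one              # Bayes' theorem
print(p_one, p_Y_given_one)                     # 4/9 1/3
```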


3. Question

Let the sum of two positive integers be 24. If the probability that their product is not less than \(\frac{3}{4}\) times their greatest possible product is \(\frac{m}{n}\), where \(\operatorname{gcd}(m, n)=1\), then \(n-m\) equals [JEE Main 2024 (Online) 8th April Morning Shift]

Hint

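Take the two numbers as \(a\) and \(b\), with

\(

a+b=24

\)

For the product to be maximum, use the AM-GM inequality:

\(

\begin{aligned}

\frac{a+b}{2} & \geq \sqrt{a b} \\

144 & \geq a b

\end{aligned}

\)

The maximum product is 144 (attained at \(a=b=12\)).

Now, \(a b \geq \frac{3}{4} \cdot 144=108\). Substituting \(b=24-a\) gives \(a^2-24 a+108 \leq 0\), i.e., \((a-6)(a-18) \leq 0\), so \(6 \leq a \leq 18\).

Sample space \(=\{(1,23),(2,22), \ldots,(23,1)\}\), so \(n(S)=23\)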

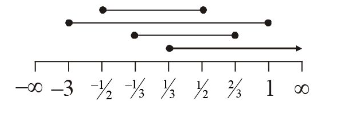

Integer points on line in shaded region

\(

\begin{aligned}

& \{(6,18),(7,17),(8,16), \ldots(18,6)\} \\

& P(E)=\frac{n(E)}{n(S)}=\frac{13}{23}=\frac{m}{n} \Rightarrow n-m=10

\end{aligned}

\)
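A direct enumeration confirms the count of 13 favourable pairs out of 23. The sketch assumes, as the solution does, that ordered pairs \((a, b)\) are counted.

```python
from fractions import Fraction

pairs = [(a, 24 - a) for a in range(1, 24)]      # ordered pairs of positive integers
max_product = max(a * b for a, b in pairs)       # greatest possible product
favourable = [(a, b) for a, b in pairs if 4 * a * b >= 3 * max_product]
p = Fraction(len(favourable), len(pairs))
answer = p.denominator - p.numerator             # n - m
print(max_product, len(favourable), p, answer)   # 144 13 13/23 10
```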


4. Question

If three letters can be posted to any one of the 5 different addresses, then the probability that the three letters are posted to exactly two addresses is : [JEE Main 2024 (Online) 6th April Evening Shift]

Hint

We have 3 letters and 5 addresses, where 3 letters are posted to exactly 2 addresses. First, we will select 2 addresses in \({ }^5 C_2\) ways.

Now, 3 letters can be posted to exactly 2 chosen addresses in \(2^3-2=6\) ways (all \(2^3\) assignments, minus the 2 in which every letter goes to the same address).

\(

\therefore \text { Probability }=\frac{{ }^5 C_2 \times 6}{5^3}=\frac{60}{125}=\frac{12}{25}

\)

Alternate:

\(

\begin{aligned}

& \text { Total ways }=5^3=125 \\

& \text { Favorable ways }={ }^5 C _2\left(2^3-2\right)=60 \\

& \text { Probability }=\frac{60}{125}=\frac{12}{25}

\end{aligned}

\)
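The figure of 60 favourable assignments can be verified by listing every way of posting the three letters. This is a checking sketch with addresses labelled 0 to 4 for convenience.

```python
from fractions import Fraction
from itertools import product

# Each letter independently goes to one of 5 addresses.
assignments = list(product(range(5), repeat=3))
favourable = [a for a in assignments if len(set(a)) == 2]  # exactly two addresses used
p = Fraction(len(favourable), len(assignments))
print(len(favourable), p)                                  # 60 12/25
```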


5. Question

A company has two plants \(A\) and \(B\) to manufacture motorcycles. \(60 \%\) of the motorcycles are manufactured at plant \(A\) and the remaining are manufactured at plant \(B\). \(80 \%\) of the motorcycles manufactured at plant \(A\) are rated of the standard quality, while \(90 \%\) of the motorcycles manufactured at plant \(B\) are rated of the standard quality. A motorcycle picked up randomly from the total production is found to be of the standard quality. If \(p\) is the probability that it was manufactured at plant \(B\), then \(126 p\) is [JEE Main 2024 (Online) 6th April Morning Shift]

Hint

\(

\begin{aligned}

& P(\text { standard motorcycle from } A)=\frac{6}{10} \times \frac{8}{10}=\frac{12}{25} \\

& P(\text { standard motorcycle from } B)=\frac{4}{10} \times \frac{9}{10}=\frac{9}{25} \\

& \text { Required probability } p=\frac{\frac{9}{25}}{\frac{12}{25}+\frac{9}{25}}=\frac{9}{21}=\frac{3}{7} \\

& \text { So, } 126 p=126 \times \frac{3}{7}=54

\end{aligned}

\)

Alternate:

\(

\begin{array}{|l|c|c|}

\hline & \text { A } & \text { B } \\

\hline \text { Manufactured } & 60 \% & 40 \% \\

\hline \text { Standard quality } & 80 \% & 90 \% \\

\hline

\end{array}

\)

\(P(\text { manufactured at } B / \text { found standard quality })=\) ?

\(A\): found to be of standard quality

\(E_1\): manufactured at plant \(B\)

\(E_2\): manufactured at plant \(A\)

\(

\begin{aligned}

& P\left(E_1\right)=\frac{40}{100} \\

& P \left( E _2\right)=\frac{60}{100} \\

& P \left( A / E _1\right)=\frac{90}{100} \\

& P \left( A / E _2\right)=\frac{80}{100}

\end{aligned}

\)

\(

\begin{aligned}

& \because P \left( E _1 / A \right)=\frac{ P \left( A / E _1\right) P \left( E _1\right)}{ P \left( A / E _1\right) P \left( E _1\right)+ P \left( A / E _2\right) P \left( E _2\right)}=\frac{3}{7} \\

& \therefore 126 p =54

\end{aligned}

\)


6. Question

The coefficients \(a , b , c\) in the quadratic equation \(a x^2+ bx + c =0\) are from the set \(\{1,2,3,4,5,6\}\). If the probability of this equation having one real root bigger than the other is \(p\), then 216 p equals : [JEE Main 2024 (Online) 5th April Evening Shift]

Hint

Equation is \(a x^2+b x+c=0\)

\(D>0\) [for the roots to be real and distinct]

\(

\Rightarrow b^2-4 a c>0

\)

For \(b \leq 2\), no values of \(a\) and \(c\) are possible, since \(4 a c \geq 4 \geq b^2\).

For \(b=3 \Rightarrow a c<\frac{9}{4}\)

\((a, c) \in\{(1,1),(1,2),(2,1)\} \Rightarrow 3\) cases

For \(b=4 \Rightarrow a c<4\)

\(

(a, c) \in\{(1,1),(1,2),(2,1),(3,1),(1,3)\} \Rightarrow 5 \text { cases }

\)

For \(b=5 \Rightarrow a c<\frac{25}{4}\)

\(

\begin{aligned}

& (a, c) \in\{(1,1),(1,2),(2,1),(3,1),(1,3),(2,2), \\

& (4,1),(1,4),(3,2),(2,3),(5,1),(1,5),(1,6), \\

& (6,1)\} \Rightarrow 14 \text { cases }

\end{aligned}

\)

For \(b=6 \Rightarrow a c<9\)

\(

\begin{aligned}

& (a, c) \in\{(1,1),(1,2),(2,1),(3,1),(1,3),(2,2), \\

& (4,1),(1,4),(3,2),(2,3),(5,1),(1,5),(1,6), \\

& (6,1),(2,4),(4,2)\} \Rightarrow 16 \text { cases }

\end{aligned}

\)

Total cases \(=3+5+14+16=38\) cases

\(\Rightarrow\) Probability, \(p =\frac{38}{216}\)

\(

\Rightarrow 216 p=38

\)
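The case-by-case count can be cross-checked by testing the discriminant for all \(6^3\) coefficient triples. A minimal verification sketch:

```python
from itertools import product

values = range(1, 7)
# Triples (a, b, c) with positive discriminant, i.e. real and distinct roots.
favourable = [(a, b, c) for a, b, c in product(values, repeat=3)
              if b * b - 4 * a * c > 0]
by_b = {b: sum(1 for t in favourable if t[1] == b) for b in values}
print(by_b)               # {1: 0, 2: 0, 3: 3, 4: 5, 5: 14, 6: 16}
print(len(favourable))    # 38
```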


7. Question

The coefficients \(a, b, c\) in the quadratic equation \(a x^2+b x+c=0\) are chosen from the set \(\{1,2,3,4,5,6,7,8\}\). The probability of this equation having repeated roots is : [JEE Main 2024 (Online) 5th April Morning Shift]

Hint

Given quadratic equation is

\(

a x^2+b x+c=0 \text { where } a, b, c \in\{1,2,3, \ldots, 8\}

\)

For repeated roots,

\(

\begin{array}{r}

b^2-4 a c=0 \\

\Rightarrow b^2=4 a c

\end{array}

\)

\(\Rightarrow a c\) must be a perfect square

\(

(a, c) \in\{(1,1),(1,4),(2,2),(2,8),(3,3),(4,1),(4,4),(5,5),(6,6),(7,7),(8,2),(8,8)\}

\)

The corresponding \(b=2 \sqrt{a c}\) must lie in the set \(\{1,2,3, \ldots, 8\}\)

\(

\begin{aligned}

& (a, b, c) \in\{(1,2,1),(1,4,4),(2,4,2),(2,8,8), \\

& (3,6,3),(4,4,1),(4,8,4),(8,8,2)\} \\

& \therefore \text { probability }=\frac{8}{8^3}=\frac{1}{64}

\end{aligned}

\)
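The eight triples with \(b^2=4ac\) can be listed exhaustively. The sketch below is a check only and assumes, as the solution does, that the coefficients are chosen independently with repetition allowed.

```python
from fractions import Fraction
from itertools import product

values = range(1, 9)
# Triples (a, b, c) with zero discriminant, i.e. repeated roots.
repeated = [(a, b, c) for a, b, c in product(values, repeat=3)
            if b * b == 4 * a * c]
p = Fraction(len(repeated), 8 ** 3)
print(repeated)
print(p)            # 1/64
```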


8. Question

If the mean of the following probability distribution of a random variable X :

\(

\begin{array}{|c|c|c|c|c|c|}

\hline X & 0 & 2 & 4 & 6 & 8 \\

\hline P ( X ) & a & 2 a & a+b & 2 b & 3 b \\

\hline

\end{array}

\)

is \(\frac{46}{9}\), then the variance of the distribution is [JEE Main 2024 (Online) 4th April Evening Shift]

Hint

\(

\begin{array}{|c|c|c|c|c|c|}

\hline X & 0 & 2 & 4 & 6 & 8 \\

\hline P(X) & a & 2 a & a+b & 2 b & 3 b \\

\hline

\end{array}

\)

\(

\begin{aligned}

& \text { Mean }=\sum x_i P\left(x_i\right) \\

& \frac{46}{9}=4 a+4 a+4 b+12 b+24 b \\

& \frac{46}{9}=8 a+40 b \\

& \frac{23}{9}=4 a+20 b \\

& 36 a+180 b=23 \dots(1)

\end{aligned}

\)

The sum of the probabilities is 1

\(

\Rightarrow 4 a+6 b=1 \dots(2)

\)

Solving (1) and (2)

\(

\begin{aligned}

a & =\frac{1}{12}, b=\frac{1}{9} \\

\sigma^2 & =\sum x_i^2 P\left(x_i\right)-\left(\sum x_i P\left(x_i\right)\right)^2 \\

& =4 \times 2 a+16(a+b)+36(2 b)+64(3 b)-\left(\frac{46}{9}\right)^2 \\

& =8(a+2(a+b)+9 b+24 b)-\left(\frac{46}{9}\right)^2 \\

& =8(3 a+35 b)-\left(\frac{46}{9}\right)^2 \\

& =8\left(\frac{3}{12}+\frac{35}{9}\right)-\left(\frac{46}{9}\right)^2 \\

& =8\left(\frac{149}{36}\right)-\left(\frac{46}{9}\right)^2=\frac{566}{81}

\end{aligned}

\)
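With \(a=\frac{1}{12}\) and \(b=\frac{1}{9}\) from the solution, the mean and variance can be recomputed exactly. The sketch below only verifies the arithmetic; it does not solve the two equations.

```python
from fractions import Fraction

a, b = Fraction(1, 12), Fraction(1, 9)      # values found in the solution
xs = [0, 2, 4, 6, 8]
ps = [a, 2 * a, a + b, 2 * b, 3 * b]        # P(X) row of the table

mean = sum(x * p for x, p in zip(xs, ps))
variance = sum(x * x * p for x, p in zip(xs, ps)) - mean ** 2
print(sum(ps), mean, variance)              # 1 46/9 566/81
```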


9. Question

Three urns A, B and C contain 7 red, 5 black; 5 red, 7 black and 6 red, 6 black balls, respectively. One of the urns is selected at random and a ball is drawn from it. If the ball drawn is black, then the probability that it is drawn from urn A is : [JEE Main 2024 (Online) 4th April Morning Shift]

Hint

Let’s denote the events as follows:

Let \(A_1, A_2\), and \(A_3\) be the events that urns \(A , B\), and C are chosen, respectively.

Let \(B\) be the event that a black ball is drawn.

We need to find the probability that the chosen urn is A given that a black ball is drawn, which is \(P\left(A_1 \mid B\right)\). Using Bayes’ theorem, we have :

\(

P\left(A_1 \mid B\right)=\frac{P\left(B \mid A_1\right) P\left(A_1\right)}{P(B)}

\)

First, we calculate each term individually :

1. The probability of choosing any urn, since they are chosen at random :

\(

P\left(A_1\right)=P\left(A_2\right)=P\left(A_3\right)=\frac{1}{3}

\)

2. The probability of drawing a black ball from each urn :

Urn A: \(P\left(B \mid A_1\right)=\frac{5}{12}\)

Urn B: \(P\left(B \mid A_2\right)=\frac{7}{12}\)

Urn C: \(P\left(B \mid A_3\right)=\frac{6}{12}=\frac{1}{2}\)

3. The total probability of drawing a black ball, \(P(B)\) :

\(

\begin{aligned}

& P(B)=P\left(B \mid A_1\right) P\left(A_1\right)+P\left(B \mid A_2\right) P\left(A_2\right)+P\left(B \mid A_3\right) P\left(A_3\right) \\

& P(B)=\frac{5}{12} \cdot \frac{1}{3}+\frac{7}{12} \cdot \frac{1}{3}+\frac{1}{2} \cdot \frac{1}{3} \\

& P(B)=\frac{5}{36}+\frac{7}{36}+\frac{6}{36} \\

& P(B)=\frac{18}{36}=\frac{1}{2}

\end{aligned}

\)

Now, substitute these into Bayes’ theorem :

\(

\begin{aligned}

& P\left(A_1 \mid B\right)=\frac{P\left(B \mid A_1\right) P\left(A_1\right)}{P(B)} \\

& P\left(A_1 \mid B\right)=\frac{\frac{5}{12} \cdot \frac{1}{3}}{\frac{1}{2}} \\

& P\left(A_1 \mid B\right)=\frac{\frac{5}{36}}{\frac{1}{2}} \\

& P\left(A_1 \mid B\right)=\frac{5}{18}

\end{aligned}

\)


10. Question

Let Ajay will not appear in JEE exam with probability \(p =\frac{2}{7}\), while both Ajay and Vijay will appear in the exam with probability \(q =\frac{1}{5}\). Then the probability, that Ajay will appear in the exam and Vijay will not appear is : [JEE Main 2024 (Online) 1st February Evening Shift]

Hint

We are given that the probability of Ajay not appearing in the JEE exam is \(p=\frac{2}{7}\), and the probability that both Ajay and Vijay will appear in the exam is \(q=\frac{1}{5}\).

We are asked to find the probability that Ajay will appear in the exam and Vijay will not. Let’s denote this probability as r .

To find \(r\), we need to use the concept of complementary events. The probability that Ajay will appear in the exam is the complement of the probability that he will not appear. So,

\(

P(\text { Ajay appears })=1-P(\text { Ajay does not appear })=1- p =1-\frac{2}{7}=\frac{5}{7} \text {. }

\)

The event that both Ajay and Vijay appear in the exam and the event that only Ajay appears (and Vijay does not) are mutually exclusive, and together they make up the event that Ajay appears. Therefore, the probability that only Ajay will appear (and Vijay will not) is the probability of Ajay appearing minus the probability of both Ajay and Vijay appearing:

\(

r =P(\text { Ajay appears })-P(\text { Both Ajay and Vijay appear })=\frac{5}{7}-\frac{1}{5} \text {. }

\)

To subtract these two fractions, we need a common denominator, which would be 35 in this case. So,

\(

r=\frac{5}{7} \cdot \frac{5}{5}-\frac{1}{5} \cdot \frac{7}{7}=\frac{25}{35}-\frac{7}{35}=\frac{25-7}{35}=\frac{18}{35}

\)

Alternate:

\(

\begin{aligned}

& P (\overline{ A })=\frac{2}{7}= p \\

& P ( A \cap V )=\frac{1}{5}= q \\

& P ( A )=\frac{5}{7} \\

& P ( A \cap \overline{ V })= P ( A )- P ( A \cap V )=\frac{5}{7}-\frac{1}{5}=\frac{18}{35}

\end{aligned}

\)


11. Question

A bag contains 8 balls, whose colours are either white or black. 4 balls are drawn at random without replacement and it was found that 2 balls are white and other 2 balls are black. The probability that the bag contains equal number of white and black balls is : [JEE Main 2024 (Online) 1st February Morning Shift]

Hint

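Since 2 white and 2 black balls were drawn, the bag must contain at least 2 balls of each colour. So there are 5 possible compositions of the bag: 2W6B, 3W5B, 4W4B, 5W3B and 6W2B, each taken as equally likely with probability \(\frac{1}{5}\). Let \(E\) be the event that 2 white and 2 black balls are drawn. By Bayes’ theorem,

\(

P\left(A_1 / E\right)=\frac{P\left(A_1\right) P\left(E / A_1\right)}{P\left(A_1\right) P\left(E / A_1\right)+P\left(A_2\right) P\left(E / A_2\right)+\ldots}

\)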

\(

\begin{aligned}

& P (4 W 4 B / 2 W 2 B)= \\

& \frac{P(4 W 4 B) \times P(2 W 2 B / 4 W 4 B)}{P(2 W 6 B) \times P(2 W 2 B / 2 W 6 B)+P(3 W 5 B) \times P(2 W 2 B / 3 W 5 B)+\ldots \ldots \ldots \ldots+P(6 W 2 B) \times P(2 W 2 B / 6 W 2 B)}

\end{aligned}

\)

\(

\begin{aligned}

& =\frac{\frac{1}{5} \times \frac{{ }^4 C _2 \times{ }^4 C _2}{{ }^8 C _4}}{\frac{1}{5} \times \frac{{ }^2 C _2 \times{ }^6 C _2}{{ }^8 C _4}+\frac{1}{5} \times \frac{{ }^3 C _2 \times{ }^5 C _2}{{ }^8 C _4}+\ldots+\frac{1}{5} \times \frac{{ }^6 C _2 \times{ }^2 C _2}{{ }^8 C _4}} \\

& =\frac{36}{15+30+36+30+15}=\frac{36}{126}=\frac{2}{7}

\end{aligned}

\)
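The five terms of the denominator can be evaluated with binomial coefficients. The sketch below follows the solution's assumption that the five bag compositions are equally likely.

```python
from fractions import Fraction
from math import comb

prior = Fraction(1, 5)       # each composition equally likely (assumption from the solution)

# P(2 white and 2 black drawn | bag has w white and 8 - w black balls)
likelihood = {w: Fraction(comb(w, 2) * comb(8 - w, 2), comb(8, 4))
              for w in range(2, 7)}

evidence = sum(prior * l for l in likelihood.values())
p_equal = prior * likelihood[4] / evidence       # posterior for 4W4B
print(p_equal)                                   # 2/7
```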


12. Question

A coin is biased so that a head is twice as likely to occur as a tail. If the coin is tossed 3 times, then the probability of getting two tails and one head is [JEE Main 2024 (Online) 31st January Evening Shift]

Hint

To solve this problem, we need to first determine the probability of getting a head \(( H )\) and the probability of getting a tail \(( T )\).

Since a head is twice as likely to occur as a tail, we can denote the probability of getting a tail as \(P(T)=p\) and the probability of getting a head as \(P(H)=2 p\).

These probabilities must sum to 1 because those are the only two possible outcomes for each coin toss :

\(

\begin{aligned}

& P(H)+P(T)=1 \\

& 2 p+p=1 \\

& 3 p=1 \\

& p=\frac{1}{3}

\end{aligned}

\)

Therefore, the probability of getting a tail \(( T )\) is \(P(T)=\frac{1}{3}\) and the probability of getting a head \(( H )\) is \(P(H)=2 \times \frac{1}{3}=\frac{2}{3}\).

Now to find the probability of getting two tails and one head, we need to consider the different sequences in which this can occur. There are three unique sequences: TTH, THT, and HTT.

The probability of each sequence is found by multiplying the probabilities of each individual event since each coin toss is independent:

\(

\begin{aligned}

& P(T T H)=P(T) \times P(T) \times P(H)=\left(\frac{1}{3}\right)^2 \times \frac{2}{3}=\frac{1}{9} \times \frac{2}{3}=\frac{2}{27} \\

& P(T H T)=P(T) \times P(H) \times P(T)=\frac{1}{3} \times \frac{2}{3} \times \frac{1}{3}=\frac{2}{27} \\

& P(H T T)=P(H) \times P(T) \times P(T)=\frac{2}{3} \times\left(\frac{1}{3}\right)^2=\frac{2}{27}

\end{aligned}

\)

The overall probability of getting two tails and one head in any order is the sum of these individual probabilities :

\(

P(2 T 1 H)=P(T T H)+P(T H T)+P(H T T)=\frac{2}{27}+\frac{2}{27}+\frac{2}{27}=\frac{6}{27}

\)

Simplifying this expression gives us: \(P(2 T 1 H)=\frac{6}{27}=\frac{2}{9}\)

Alternate:

Let the probability of a tail be \(\frac{1}{3}\)

\(\Rightarrow\) Probability of getting head \(=\frac{2}{3}\)

\(\therefore\) Probability of getting 2 tails and 1 head

\(

\begin{aligned}

& =\left(\frac{1}{3} \times \frac{2}{3} \times \frac{1}{3}\right) \times 3 \\

& =\frac{2}{27} \times 3 \\

& =\frac{2}{9}

\end{aligned}

\)
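As a cross-check, the same result can be obtained by brute-force enumeration of all eight toss sequences. This is a minimal sketch using exact fractions; the function name `prob_two_tails_one_head` is illustrative and not part of the original solution.

```python
from fractions import Fraction
from itertools import product

# P(H) = 2/3 and P(T) = 1/3, as derived in the solution.
p = {"H": Fraction(2, 3), "T": Fraction(1, 3)}

def prob_two_tails_one_head():
    """Sum the probabilities of all 3-toss sequences with exactly two tails."""
    total = Fraction(0)
    for seq in product("HT", repeat=3):
        if seq.count("T") == 2:
            total += p[seq[0]] * p[seq[1]] * p[seq[2]]
    return total

print(prob_two_tails_one_head())  # 2/9
```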

13. Question

Three rotten apples are accidentally mixed with fifteen good apples. Assuming the random variable \(x\) to be the number of rotten apples in a draw of two apples, the variance of \(x\) is [JEE Main 2024 (Online) 31st January Morning Shift]

There are 3 rotten apples and 15 good apples.

Let \(X\) be the number of rotten apples among the two drawn.

Then \(P ( X =0)=\frac{{ }^{15} C _2}{{ }^{18} C _2}=\frac{105}{153}\)

\(P ( X =1)=\frac{{ }^3 C _1 \times{ }^{15} C _1}{{ }^{18} C _2}=\frac{45}{153}\)

\(P ( X =2)=\frac{{ }^3 C _2}{{ }^{18} C _2}=\frac{3}{153}\)

\(E ( X )=0 \times \frac{105}{153}+1 \times \frac{45}{153}+2 \times \frac{3}{153}=\frac{51}{153}\)

\(=\frac{1}{3}\)

\(\operatorname{Var}( X )= E \left( X ^2\right)-( E ( X ))^2\)

\(=0 \times \frac{105}{153}+1 \times \frac{45}{153}+4 \times \frac{3}{153}-\left(\frac{1}{3}\right)^2\)

\(=\frac{57}{153}-\frac{1}{9}=\frac{40}{153}\)
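The distribution above is hypergeometric, so the mean and variance can be recomputed mechanically. The sketch below assumes the two apples are drawn without replacement, as in the solution; the names `pmf`, `mean` and `variance` are illustrative.

```python
from fractions import Fraction
from math import comb

ROTTEN, GOOD, DRAWN = 3, 15, 2

def pmf(k):
    """P(X = k): k rotten apples among the DRAWN apples (hypergeometric)."""
    return Fraction(comb(ROTTEN, k) * comb(GOOD, DRAWN - k),
                    comb(ROTTEN + GOOD, DRAWN))

mean = sum(k * pmf(k) for k in range(DRAWN + 1))
second_moment = sum(k * k * pmf(k) for k in range(DRAWN + 1))
variance = second_moment - mean ** 2
print(mean, variance)  # 1/3 40/153
```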

14. Question

Two marbles are drawn in succession from a box containing 10 red, 30 white, 20 blue and 15 orange marbles, with replacement being made after each drawing. Then the probability, that first drawn marble is red and second drawn marble is white, is [JEE Main 2024 (Online) 31st January Morning Shift]

Probability of drawing first red and then white

\(

\begin{aligned}

& =\frac{10}{75} \times \frac{30}{75} \\

& =\frac{4}{75}

\end{aligned}

\)

Alternate:

To solve this problem, we need to calculate the probability of two independent events occurring in succession: the first marble drawn is red, and the second marble drawn is white. Since the drawing is with replacement, the number of marbles of each color remains the same for both draws.

The total number of marbles in the box is the sum of red, white, blue, and orange marbles:

\(

\text { Total } \text { marbles }=10(\text { red })+30(\text { white })+20(\text { blue })+15(\text { orange })=75 .

\)

The probability of drawing a red marble in the first draw is the number of red marbles divided by the total number of marbles:

\(

P(\text { First is red })=\frac{10}{75} \text {. }

\)

Since the marble is replaced, the probability of drawing a white marble in the second draw remains as the number of white marbles divided by the total number of marbles:

\(

P(\text { Second is white })=\frac{30}{75} \text {. }

\)

The probability of both independent events occurring in succession (drawing a red marble first and then a white marble) is the product of their individual probabilities:

\(

P(\text { First is red and second is white })=P(\text { First is red }) \times P(\text { Second is white })=\frac{10}{75} \times \frac{30}{75}

\)

Now, let’s calculate this probability:

\(

P(\text { First is red and second is white })=\frac{10 \times 30}{75 \times 75}=\frac{300}{5625}=\frac{4}{75} \text {. }

\)

15. Question

Bag A contains 3 white, 7 red balls and Bag B contains 3 white, 2 red balls. One bag is selected at random and a ball is drawn from it. The probability of drawing the ball from the bag A , if the ball drawn is white, is [JEE Main 2024 (Online) 30th January Evening Shift]

\(E _1: A\) is selected

\(E _2: B\) is selected

\(E :\) white ball is drawn

\(

\begin{aligned}

& P \left( E _1 / E \right)= \\

& \frac{P\left(E_1\right) \cdot P\left(E / E_1\right)}{P\left(E_1\right) \cdot P\left(E / E_1\right)+P\left(E_2\right) \cdot P\left(E / E_2\right)}=\frac{\frac{1}{2} \times \frac{3}{10}}{\frac{1}{2} \times \frac{3}{10}+\frac{1}{2} \times \frac{3}{5}} \\

& =\frac{3}{3+6}=\frac{1}{3}

\end{aligned}

\)

16. Question

Two integers \(x\) and \(y\) are chosen with replacement from the set \(\{0,1,2,3, \ldots, 10\}\). Then the probability that \(|x-y|>5\), is : [JEE Main 2024 (Online) 30th January Morning Shift]

If \(x=0, y=6,7,8,9,10\)

If \(x=1, y=7,8,9,10\)

If \(x=2, y=8,9,10\)

If \(x=3, y=9,10\)

If \(x=4, y=10\)

If \(x=5, y=\) no possible value

Counting the same pairs again with \(x\) and \(y\) interchanged, total favourable ways \(=(5+4+3+2+1) \times 2\)

\(=30\)

Required probability \(=\frac{30}{11 \times 11}=\frac{30}{121}\)
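The count of 30 favourable ordered pairs can be confirmed by direct enumeration over all \(11 \times 11\) pairs. A minimal sketch (variable names are illustrative):

```python
from fractions import Fraction

values = range(11)  # the set {0, 1, ..., 10}

# Count ordered pairs (x, y), chosen with replacement, with |x - y| > 5.
favourable = sum(1 for x in values for y in values if abs(x - y) > 5)
probability = Fraction(favourable, len(values) ** 2)
print(favourable, probability)  # 30 30/121
```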

17. Question

An integer is chosen at random from the integers \(1,2,3, \ldots, 50\). The probability that the chosen integer is a multiple of at least one of 4,6 and 7 is [JEE Main 2024 (Online) 29th January Evening Shift]

Given set \(=\{1,2,3, \ldots, 50\}\)

\(P ( A )=\) Probability that number is multiple of 4

\(P ( B )=\) Probability that number is multiple of 6

\(P ( C )=\) Probability that number is multiple of 7

Now,

\(

P ( A )=\frac{12}{50}, P ( B )=\frac{8}{50}, P ( C )=\frac{7}{50}

\)

again

\(

\begin{aligned}

& P ( A \cap B )=\frac{4}{50}, P ( B \cap C )=\frac{1}{50}, P ( A \cap C )=\frac{1}{50} \\

& P ( A \cap B \cap C )=0

\end{aligned}

\)

Thus

\(

\begin{aligned}

& P(A \cup B \cup C)=\frac{12}{50}+\frac{8}{50}+\frac{7}{50}-\frac{4}{50}-\frac{1}{50}-\frac{1}{50}+0 \\

& \quad=\frac{21}{50}

\end{aligned}

\)
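The inclusion-exclusion total can be checked by simply counting the integers from 1 to 50 that are divisible by at least one of 4, 6 and 7. A short sketch, with illustrative names:

```python
from fractions import Fraction

# Integers in 1..50 that are multiples of at least one of 4, 6, 7.
count = sum(1 for n in range(1, 51)
            if n % 4 == 0 or n % 6 == 0 or n % 7 == 0)
probability = Fraction(count, 50)
print(count, probability)  # 21 21/50
```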

18. Question

A fair die is thrown until 2 appears. Then the probability, that 2 appears in even number of throws, is [JEE Main 2024 (Online) 29th January Morning Shift]

Required probability \(=\)

\(

\begin{aligned}

& \frac{5}{6} \times \frac{1}{6}+\left(\frac{5}{6}\right)^3 \times \frac{1}{6}+\left(\frac{5}{6}\right)^5 \times \frac{1}{6}+\ldots \\

& =\frac{1}{6} \times \frac{\frac{5}{6}}{1-\frac{25}{36}}=\frac{5}{11}

\end{aligned}

\)

Alternate:

The first 2 appears on throw number \(2 k\) exactly when none of the first \(2 k-1\) throws shows a 2 and throw \(2 k\) does. The chance of not rolling a 2 on a single throw is \(5 / 6\), so this has probability \((5 / 6)^{2 k-1} \times(1 / 6)\); for example, the probability that 2 first appears on the second throw is \((5 / 6) \times(1 / 6)\). Summing over the second, fourth, sixth, etc., throws gives an infinite geometric series with first term \((5 / 6) \times(1 / 6)\) and common ratio \((5 / 6)^2\).

Total probability for even rolls: \((5 / 6) \times(1 / 6) /\left(1-(5 / 6)^2\right)=(5 / 6) \times(1 / 6) /(11 / 36)=5 / 11\)
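The geometric-series value can be checked both exactly and numerically. The sketch below evaluates the closed form with exact fractions and compares it with a partial sum over the first 200 even throw counts; the cutoff of 200 terms is an arbitrary choice for illustration.

```python
from fractions import Fraction

q = Fraction(5, 6)  # probability that a throw is not a 2
p = Fraction(1, 6)  # probability that a throw is a 2

# Closed form of the series q*p + q^3*p + q^5*p + ...
closed_form = q * p / (1 - q ** 2)

# Partial sum: first 2 appears on throw 2k, for k = 1..200.
partial = sum(float(q) ** (2 * k - 1) * float(p) for k in range(1, 201))
print(closed_form, round(partial, 6))  # 5/11 0.454545
```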

19. Question

An urn contains 6 white and 9 black balls. Two successive draws of 4 balls are made without replacement. The probability, that the first draw gives all white balls and the second draw gives all black balls, is : [JEE Main 2024 (Online) 27th January Evening Shift]

\(

\begin{aligned}

& P=\frac{{ }^6 C _4}{{ }^{15} C _4} \cdot \frac{{ }^9 C _4}{{ }^{11} C _4} \\

& =\frac{15 \times 24}{15 \cdot 14 \cdot 13 \cdot 12} \times \frac{9 \cdot 8 \cdot 7 \cdot 6 \times 24}{24 \cdot 11 \cdot 10 \cdot 9 \cdot 8} \\

& =\frac{1}{13 \cdot 7} \times \frac{7 \cdot 6}{10 \cdot 11} \\

& =\frac{6}{13 \cdot 10 \cdot 11} \\

& =\frac{3}{13 \cdot 5 \cdot 11} \\

& =\frac{3}{715}

\end{aligned}

\)

20. Question

A bag contains 6 white and 4 black balls. A die is rolled once and the number of balls equal to the number obtained on the die are drawn from the bag at random. The probability that all the balls drawn are white is : [JEE Main 2023 (Online) 15th April Morning Shift]

\(

\begin{array}{|ll|}

\hline 6 & W \\

4 & B \\

\hline

\end{array}

\)

\(

\begin{aligned}

& \frac{1}{6} \times\left[\frac{{ }^6 C _1}{{ }^{10} C _1}+\frac{{ }^6 C _2}{{ }^{10} C _2}+\frac{{ }^6 C _3}{{ }^{10} C _3}+\frac{{ }^6 C _4}{{ }^{10} C _4}+\frac{{ }^6 C _5}{{ }^{10} C _5}+\frac{{ }^6 C _6}{{ }^{10} C _6}\right] \\

& =\frac{1}{6}\left(\frac{126+70+35+15+5+1}{210}\right)=\frac{42}{210}=\frac{1}{5} \\

&

\end{aligned}

\)

Alternate:

Let \(X\) be the number rolled on the die, and let \(W\) be the event that all balls drawn are white. We want to find the probability \(P(W)\), which can be calculated using the law of total probability as follows :

\(

P(W)=\sum_{x=1}^6 P(W \mid X=x) P(X=x)

\)

The probability of rolling any number from 1 to 6 on the die is equal, so \(P(X=x)=\frac{1}{6}\) for all \(x \in\{1,2,3,4,5,6\}\).

Now let’s calculate the conditional probabilities \(P(W \mid X=x)\) for each possible value of \(x\) :

1. \(P(W \mid X=1)=\frac{{ }^6 C_1}{{ }^{10} C_1}=\frac{6}{10}=\frac{3}{5}\), since there are 6 white balls out of a total of 10 balls.

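2. \(P(W \mid X=2)=\frac{{ }^6 C_2}{{ }^{10} C_2}=\frac{15}{45}=\frac{1}{3}\), since there are 15 ways to choose 2 white balls out of 6 , and 45 ways to choose 2 balls out of 10 .

3. \(P(W \mid X=3)=\frac{{ }^6 C_3}{{ }^{10} C_3}=\frac{20}{120}=\frac{1}{6}\), since there are 20 ways to choose 3 white balls out of 6 , and 120 ways to choose 3 balls out of 10 .

4. \(P(W \mid X=4)=\frac{{ }^6 C_4}{{ }^{10} C_4}=\frac{15}{210}=\frac{1}{14}\), since there are 15 ways to choose 4 white balls out of 6 , and 210 ways to choose 4 balls out of 10 .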

5. \(P(W \mid X=5)=\frac{{ }^6 C_5}{{ }^{10} C_5}=\frac{6}{252}=\frac{1}{42}\), since there are 6 ways to choose 5 white balls out of 6 , and 252 ways to choose 5 balls out of 10 .

6. \(P(W \mid X=6)=\frac{{ }^6 C_6}{{ }^{10} C_6}=\frac{1}{210}\), since there is 1 way to choose 6 white balls out of 6 , and 210 ways to choose 6 balls out of 10 .

Using the law of total probability, we have :

\(

\begin{aligned}

& P(W)=\frac{1}{6}(P(W \mid X=1)+P(W \mid X=2)+P(W \mid X=3)+P(W \mid X=4)+P(W \mid X=5)+P(W \mid X=6)) \\

& P(W)=\frac{1}{6}\left(\frac{3}{5}+\frac{1}{3}+\frac{1}{6}+\frac{1}{14}+\frac{1}{42}+\frac{1}{210}\right)

\end{aligned}

\)

To simplify this expression, find a common denominator :

\(

P(W)=\frac{1}{6}\left(\frac{126}{210}+\frac{70}{210}+\frac{35}{210}+\frac{15}{210}+\frac{5}{210}+\frac{1}{210}\right)

\)

Add the fractions :

\(

P(W)=\frac{1}{6}\left(\frac{126+70+35+15+5+1}{210}\right)=\frac{42}{210}=\frac{1}{5}

\)
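The law of total probability used here translates directly into a short loop over the die outcomes. This is a sketch under the solution's assumptions (fair die, balls drawn without replacement); `all_white` is an illustrative helper name. It also reproduces the \(x=3\) term, \(\frac{1}{6}\), that appears in the sum.

```python
from fractions import Fraction
from math import comb

WHITE, BLACK = 6, 4

def all_white(x):
    """P(W | X = x): all x drawn balls are white."""
    return Fraction(comb(WHITE, x), comb(WHITE + BLACK, x))

# P(W) = sum over die outcomes x of P(X = x) * P(W | X = x), with P(X = x) = 1/6.
p_w = sum(Fraction(1, 6) * all_white(x) for x in range(1, 7))
print(all_white(3), p_w)  # 1/6 1/5
```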

21. Question

A coin is biased so that the head is 3 times as likely to occur as tail. This coin is tossed until a head or three tails occur. If X denotes the number of tosses of the coin, then the mean of X is : [JEE Main 2023 (Online) 13th April Morning Shift]

The given probabilities for getting a head \(( H )\) and a tail \(( T )\) are as follows:

\(

P(H)=\frac{3}{4}, \quad P(T)=\frac{1}{4}

\)

The random variable \(X\) can take the values 1,2 , or 3 . These correspond to the following events:

\(X =1\) : A head is obtained on the first toss. This happens with probability

\(

P(H)=\frac{3}{4}

\)

\(X =2\) : A tail is obtained on the first toss and a head on the second. This happens with probability \(P(T) P(H)=\frac{1}{4} \times \frac{3}{4}\).

\(X = 3\) : Either two tails and then a head are obtained, or three tails are obtained. This happens with probability

\(

P(T) P(T) P(H)+P(T) P(T) P(T)=\left(\frac{1}{4}\right)^2 \times \frac{3}{4}+\left(\frac{1}{4}\right)^3

\)

Now, we calculate the mean (expected value) of \(X\) :

\(

\begin{aligned}

E(X) & =1 \cdot P(X=1)+2 \cdot P(X=2)+3 \cdot P(X=3) \\

& =1 \cdot \frac{3}{4}+2 \cdot\left(\frac{1}{4} \times \frac{3}{4}\right)+3 \cdot\left[\left(\frac{1}{4}\right)^2 \times \frac{3}{4}+\left(\frac{1}{4}\right)^3\right] \\

& =\frac{3}{4}+\frac{3}{8}+3 \cdot\left(\frac{1}{64}+\frac{3}{64}\right) \\

& =\frac{3}{4}+\frac{3}{8}+\frac{3}{16} \\

& =3 \cdot\left(\frac{7}{16}\right) \\

& =\frac{21}{16} .

\end{aligned}

\)

22. Question

Two dice A and B are rolled. Let the numbers obtained on A and B be \(\alpha\) and \(\beta\) respectively. If the variance of \(\alpha-\beta\) is \(\frac{p}{q}\), where \(p\) and \(q\) are co-prime, then the sum of the positive divisors of \(p\) is equal to : [JEE Main 2023 (Online) 12th April Morning Shift]

\(

\begin{array}{|c|l|c|}

\hline \alpha-\beta & \text { Case } & P \\

\hline 5 & (6,1) & 1 / 36 \\

\hline 4 & (6,2)(5,1) & 2 / 36 \\

\hline 3 & (6,3)(5,2)(4,1) & 3 / 36 \\

\hline 2 & (6,4)(5,3)(4,2)(3,1) & 4 / 36 \\

\hline 1 & (6,5)(5,4)(4,3)(3,2)(2,1) & 5 / 36 \\

\hline 0 & (6,6)(5,5) \ldots \ldots(1,1) & 6 / 36 \\

\hline-1 & —– & 5 / 36 \\

\hline-2 & —– & 4 / 36 \\

\hline-3 & —– & 3 / 36 \\

\hline-4 & (2,6)(1,5) & 2 / 36 \\

\hline-5 & (1,6) & 1 / 36 \\

\hline

\end{array}

\)

\(

E\left[X^2\right]=\sum_{i=-5}^5\left(x_i\right)^2 P\left(x_i\right)

\)

Here \(X=\alpha-\beta\). Substituting the values from the table:

\(

E\left[X^2\right]=2\left[\frac{25}{36}+\frac{32}{36}+\frac{27}{36}+\frac{16}{36}+\frac{5}{36}\right]=\frac{105}{18}=\frac{35}{6}

\)

Next, we calculate the expected value of the differences. The expected value is calculated as the sum of the products of each outcome and its corresponding probability. Given that the table is symmetric around 0 , the expected value is 0 .

\(

E[X]=\sum_{i=-5}^5 x_i P\left(x_i\right)=0

\)

Now, we can calculate the variance, which is the expected value of the squared differences minus the square of the expected value of the differences:

\(

\operatorname{Var}[X]=E\left[X^2\right]-(E[X])^2=\frac{35}{6}-0^2=\frac{35}{6}

\)

Here, \(p=35\) and \(q=6\), and they are co-prime.

The positive divisors of 35 are 1, 5, 7, and 35 . The sum of these divisors is \(1+5+7+35=48\)
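The variance and the divisor sum can both be verified by enumerating the 36 equally likely outcomes of the two dice. A minimal sketch with illustrative variable names:

```python
from fractions import Fraction
from itertools import product

# All 36 values of alpha - beta for two fair dice.
diffs = [a - b for a, b in product(range(1, 7), repeat=2)]

mean = Fraction(sum(diffs), 36)
variance = Fraction(sum(d * d for d in diffs), 36) - mean ** 2

p = variance.numerator  # Fraction is stored in lowest terms, so p and q are co-prime
divisor_sum = sum(d for d in range(1, p + 1) if p % d == 0)
print(variance, divisor_sum)  # 35/6 48
```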

23. Question

Let \(S=\left\{M=\left[a_{i j}\right], a_{i j} \in\{0,1,2\}, 1 \leq i, j \leq 2\right\}\) be a sample space and \(A=\{M \in S: M\) is invertible \(\}\) be an event. Then \(P(A)\) is equal to : [JEE Main 2023 (Online) 11th April Morning Shift]

We have, \(S=\left\{M=\left[a_{i j}\right], a_{i j} \in\{0,1,2\}, 1 \leq i, j \leq 2\right\}\)

Let \(M=\left[\begin{array}{ll}a & b \\ c & d\end{array}\right]\), where \(a, b, c, d \in\{0,1,2\}\)

\(

n(S)=3^4=81

\)

If \(M\) is invertible, then \(|M| \neq 0\)

So we first count the matrices that are not invertible, i.e., those with \(|M|=0\)

\(

\therefore a d-b c=0 \text { or } a d=b c

\)

Case I: When \(a d=b c=0\), then

There are five ways when \(a d=0\) i.e.,

\(

(a, d)=(0,0),(0,1),(0,2),(1,0),(2,0)

\)

Similarly, there are again five ways, when \(b c=0\)

\(\therefore\) There are total \(5 \times 5=25\) ways, when \(a d=b c=0\)

Case II: When \(a d=b c=1\)

There is only one way, when \(a d=b c=1\)

i.e. \(\quad a=b=c=d=1\)

Case III: When \(a d=b c=2\)

There are two ways, when \(a d=2\), i.e.

\(

(a, d)=(1,2) \text { or }(2,1)

\)

Similarly, there are two ways when \(b c=2\), i.e., \((b, c)=(1,2)\) or \((2,1)\)

\(\therefore\) There are total \(2 \times 2=4\) ways, when \(a d=b c=2\)

Case IV: When \(a d=b c=4\)

There is only one way, when \(a d=b c=4\)

i.e., \(a=b=c=d=2\)

\(\therefore\) Total number of ways, when \(|M|=0\), is \(25+1+4+1=31\), so \(P(\bar{A})=\frac{31}{81}\)

Hence, \(P(A)=1-P(\bar{A})=1-\frac{31}{81}=\frac{50}{81}\)
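Since the sample space has only 81 matrices, the case analysis can be confirmed by enumerating every \((a, b, c, d)\) and testing the determinant. A brute-force sketch (names are illustrative):

```python
from fractions import Fraction
from itertools import product

# Count 2x2 matrices [[a, b], [c, d]] with entries in {0, 1, 2} and zero determinant.
singular = sum(1 for a, b, c, d in product(range(3), repeat=4)
               if a * d - b * c == 0)
p_invertible = 1 - Fraction(singular, 3 ** 4)
print(singular, p_invertible)  # 31 50/81
```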

24. Question

Let \(N\) denote the sum of the numbers obtained when two dice are rolled. If the probability that \(2^N<N!\) is \(\frac{m}{n}\), where m and n are coprime, then \(4 m-3 n\) is equal to: [JEE Main 2023 (Online) 10th April Morning Shift]

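\(N\) denotes the sum of the numbers obtained when two dice are rolled, so \(N \in\{2,3, \ldots, 12\}\).

The inequality \(2^N<N!\) fails for \(N=2\) (since \(4>2\)) and for \(N=3\) (since \(8>6\)), and holds for every \(N \geq 4\) (since \(16<24\), and each further step multiplies the left side by 2 but the right side by more than 2),

i.e., \(4 \leq N \leq 12\), i.e., \(N \in\{4,5,6, \ldots, 12\}\)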

Now, \(P(N=2)+P(N=3)=\frac{1}{36}+\frac{2}{36}=\frac{3}{36}=\frac{1}{12}\)

So, required probability \(=1-\frac{1}{12}=\frac{11}{12}=\frac{m}{n}\)

\(

4 m-3 n=4 \times 11-3 \times 12=44-36=8

\)

25. Question

If the probability that the random variable X takes values \(x\) is given by \(P ( X =x)= k (x+1) 3^{-x}, x=0,1,2,3, \ldots\), where k is a constant, then \(P ( X \geq 2)\) is equal to : [JEE Main 2023 (Online) 8th April Evening Shift]

As we know, the sum of all the probabilities \(=1\)

So, \(\sum_{x=0}^{\infty} P ( X =x)=1\)

\(

\Rightarrow k\left[1+2 \cdot 3^{-1}+3 \cdot 3^{-2}+\ldots \infty\right]=1

\)

Let \(S=1+\frac{2}{3}+\frac{3}{3^2}+\ldots+\infty\)

\(

\Rightarrow \frac{S}{3}=0+\frac{1}{3}+\frac{2}{3^2}+\frac{3}{3^3}+\ldots+\infty

\)

On subtracting, we get

\(

\begin{aligned}

& \frac{2 S}{3}=1+\frac{1}{3}+\frac{1}{3^2}+\ldots+\infty \\

& \Rightarrow \frac{2 S}{3}=\frac{1}{1-\frac{1}{3}}=\frac{1}{\frac{2}{3}} \\

& \Rightarrow \frac{2 S}{3}=\frac{3}{2} \\

& \Rightarrow S=\frac{9}{4}

\end{aligned}

\)

So, \(k \times \frac{9}{4}=1 \Rightarrow k=\frac{4}{9}\)

Now, \(P ( X \geq 2)=1- P ( X <2)\)

\(

\begin{aligned}

& =1- P ( X =0)- P ( X =1) \\

& =1-\frac{4}{9}(1)-\frac{4}{9} \times \frac{2}{3} \\

& =1-\frac{4}{9}-\frac{8}{27}=\frac{27-12-8}{27}=\frac{7}{27}

\end{aligned}

\)
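The value \(k=\frac{4}{9}\) and the final answer can be sanity-checked numerically. The sketch below sums the first 60 terms of the distribution (an arbitrary cutoff; the tail beyond it is negligible) and computes \(P(X \geq 2)\) exactly; `pmf` is an illustrative name.

```python
from fractions import Fraction

k = Fraction(4, 9)

def pmf(x):
    """P(X = x) = k (x + 1) 3^(-x) for x = 0, 1, 2, ..."""
    return k * (x + 1) * Fraction(1, 3) ** x

partial_total = sum(pmf(x) for x in range(60))  # should be very close to 1
p_at_least_2 = 1 - pmf(0) - pmf(1)
print(p_at_least_2, round(float(partial_total), 9))  # 7/27 1.0
```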

26. Question

In a bolt factory, machines \(A, B\) and \(C\) manufacture respectively \(20 \%, 30 \%\) and \(50 \%\) of the total bolts. Of their output 3,4 and 2 percent are respectively defective bolts. A bolt is drawn at random from the product. If the bolt drawn is found to be defective, then the probability that it is manufactured by the machine \(C\) is : [JEE Main 2023 (Online) 8th April Morning Shift]

Given : \(P(A)=\frac{20}{100}=\frac{2}{10}\)

\(

\begin{aligned}

& P(B)=\frac{30}{100}=\frac{3}{10} \\

& P(C)=\frac{50}{100}=\frac{5}{10}

\end{aligned}

\)

Let \(E \rightarrow\) Event that the bolt is defective.

\(

\begin{aligned}

& \text { So, } P(E / A)=\frac{3}{100} \\

& P\left(\frac{E}{B}\right)=\frac{4}{100}, P\left(\frac{E}{C}\right)=\frac{2}{100} \\

& \text { So, } P ( C / E ) \\

& =\frac{P\left(\frac{E}{C}\right) \times P(C)}{P\left(\frac{E}{A}\right) \times P(A)+P\left(\frac{E}{B}\right) \times P(B)+P\left(\frac{E}{C}\right) \times P(C)} \\

& =\frac{\frac{5}{10} \times \frac{2}{100}}{\frac{3}{100} \times \frac{2}{10}+\frac{4}{100} \times \frac{3}{10}+\frac{2}{100} \times \frac{5}{10}} \\

& =\frac{10}{6+12+10}=\frac{10}{28}=\frac{5}{14}

\end{aligned}

\)

27. Question

Three dice are rolled. If the probability of getting different numbers on the three dice is \(\frac{p}{q}\), where \(p\) and \(q\) are co-prime, then \(q-p\) is equal to : [JEE Main 2023 (Online) 6th April Evening Shift]

Total number of outcomes \(=6 \times 6 \times 6=216\)

The number of outcomes with different numbers on the three dice is \({ }^6 P_3=\frac{6!}{3!}=120\)

\(\therefore\) Required probability \(=\frac{120}{216}=\frac{5}{9}\)

\(\therefore p=5\) and \(q=9\)

\(

\therefore q-p=9-5=4

\)

28. Question

Two dice are thrown independently. Let A be the event that the number appeared on the \(1^{\text {st }}\) die is less than the number appeared on the \(2^{\text {nd }}\) die, B be the event that the number appeared on the \(1^{\text {st }}\) die is even and that on the second die is odd, and C be the event that the number appeared on the \(1^{\text {st }}\) die is odd and that on the \(2^{\text {nd }}\) is even. Then : [JEE Main 2023 (Online) 1st February Evening Shift]

\(

\begin{aligned}

& A=\{(1,2),(1,3),(1,4),(1,5),(1,6),(2,3),(2,4),(2,5),(2,6),(3,4),(3,5),(3,6),(4,5),(4,6),(5,6)\} \\

& n(A)=15 \\

& B=\{(2,1),(2,3),(2,5),(4,1),(4,3),(4,5),(6,1),(6,3),(6,5)\} \\

& n(B)=9 \\

& C=\{(1,2),(1,4),(1,6),(3,2),(3,4),(3,6),(5,2),(5,4),(5,6)\} \\

& n(C)=9 \\

& (4,5) \in A \text { and }(4,5) \in B \\

& \therefore A \text { and } B \text { are not exclusive events } \\

& \quad n((A \cup B) \cap C)=n(A \cap C)+n(B \cap C)-n(A \cap B \cap C) \\

& =6+0-0 \\

& =6

\end{aligned}

\)

Option (d) is correct.

\(

\begin{aligned}

& P(B)=\frac{9}{36}, P(C)=\frac{9}{36}, P(B \cap C)=0 \\

& \Rightarrow P(B) \cdot P(C) \neq P(B \cap C)

\end{aligned}

\)

\(\therefore B\) and \(C\) are not independent.
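The three events are small enough to build explicitly as sets of ordered pairs, which makes the set sizes and the independence test easy to confirm. A minimal sketch; `prob` is an illustrative helper.

```python
from fractions import Fraction
from itertools import product

omega = set(product(range(1, 7), repeat=2))  # (first die, second die)
A = {(a, b) for a, b in omega if a < b}
B = {(a, b) for a, b in omega if a % 2 == 0 and b % 2 == 1}
C = {(a, b) for a, b in omega if a % 2 == 1 and b % 2 == 0}

def prob(event):
    """Probability of an event under equally likely outcomes."""
    return Fraction(len(event), len(omega))

print(len(A), len(B), len(C))            # 15 9 9
print(len((A | B) & C))                  # 6
print(prob(B & C) == prob(B) * prob(C))  # False
```

Here `A & C` has 6 elements and `B & C` is empty, which is where the total of 6 comes from.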

29. Question

A bag contains 6 balls. Two balls are drawn from it at random and both are found to be black. The probability that the bag contains at least 5 black balls is : [JEE Main 2023 (Online) 31st January Morning Shift]

Let \(E_i \rightarrow\) Bag has exactly \(i\) black balls \((i=1,2, \ldots, 6)\), each case taken as equally likely

\(E \rightarrow 2\) balls are drawn and both are black

\(

\begin{aligned}

& \therefore P\left(\frac{E_5 \text { or } E_6}{E}\right)=\frac{P\left(\frac{E}{E_5}\right)+P\left(\frac{E}{E_6}\right)}{\sum_{i=1}^6 P\left(\frac{E}{E_i}\right)} \\

& =\frac{\frac{{ }^5 C_2}{{ }^6 C_2}+\frac{{ }^6 C_2}{{ }^6 C_2}}{0+\frac{{ }^2 C_2}{{ }^6 C_2}+\frac{{ }^3 C_2}{{ }^6 C_2}+\frac{{ }^4 C_2}{{ }^6 C_2}+\frac{{ }^5 C_2}{{ }^6 C_2}+\frac{{ }^6 C_2}{{ }^6 C_2}} \\

& =\frac{10+15}{1+3+6+10+15}=\frac{25}{35}=\frac{5}{7} \\

&

\end{aligned}

\)

30. Question

If an unbiased die, marked with \(-2,-1,0,1,2,3\) on its faces, is thrown five times, then the probability that the product of the outcomes is positive, is : [JEE Main 2023 (Online) 30th January Morning Shift]

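The product is positive when no throw shows 0 and an even number of throws (0, 2 or 4) show a negative face. There are 3 positive faces \((1,2,3)\) and 2 negative faces \((-2,-1)\), which gives the following counts for 0, 2 and 4 negative faces respectively:

\(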

\begin{aligned}

& { }^5 C_0 \times 3^5=243 \\

& { }^5 C_2 \times 2^2 \times 3^3=1080 \\

& { }^5 C_4 \times 2^4 \times 3=240 \\

& \therefore \text { required probability } \\

& =\frac{243+1080+240}{6 \times 6 \times 6 \times 6 \times 6}=\frac{521}{2592}

\end{aligned}

\)
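With only \(6^5=7776\) outcomes, the favourable count can be confirmed by enumerating every sequence of five throws and testing the sign of the product. A brute-force sketch (variable names are illustrative):

```python
from fractions import Fraction
from itertools import product
from math import prod

faces = (-2, -1, 0, 1, 2, 3)

# Count sequences of five throws whose product is strictly positive.
favourable = sum(1 for roll in product(faces, repeat=5) if prod(roll) > 0)
probability = Fraction(favourable, 6 ** 5)
print(favourable, probability)  # 1563 521/2592
```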

31. Question

Let \(S =\left\{w_1, w_2, \ldots \ldots\right\}\) be the sample space associated to a random experiment. Let \(P\left(w_n\right)=\frac{P\left(w_{n-1}\right)}{2}, n \geq 2\). Let \(A=\{2 k+3 l: k, l \in N\}\) and \(B=\left\{w_n: n \in A\right\}\). Then \(P ( B )\) is equal to : [JEE Main 2023 (Online) 29th January Evening Shift]

\(

\begin{aligned}

& P\left(w_1\right)+\frac{P\left(w_1\right)}{2}+\frac{P\left(w_1\right)}{2^2}+\ldots \ldots=1 \\

& \therefore P\left(w_1\right)=\frac{1}{2}

\end{aligned}

\)

Hence, \(P\left(w_n\right)=\frac{1}{2^n}\)

Every number except \(1,2,3,4,6\) is representable in the form \(2 k+3 l\) where \(k, l \in N\).

\(

\begin{aligned}

& \therefore P(B)=1-P\left(w_1\right)-P\left(w_2\right)-P\left(w_3\right)-P\left(w_4\right)-P\left(w_6\right) \\

& =\frac{3}{64}

\end{aligned}

\)
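The list of non-representable indices can be checked by brute force. The sketch below assumes \(N=\{1,2,3, \ldots\}\) and only tests indices below an arbitrary bound `LIMIT`; that every larger index is representable is taken from the solution's claim rather than verified by the code.

```python
from fractions import Fraction

LIMIT = 60  # arbitrary search bound for this illustration

# All numbers of the form 2k + 3l with k, l >= 1 (only values below LIMIT matter).
representable = {2 * k + 3 * l for k in range(1, LIMIT) for l in range(1, LIMIT)}
missing = [n for n in range(1, LIMIT) if n not in representable]

# P(w_n) = 1 / 2^n, so P(B) = 1 - sum of P(w_n) over the missing indices.
p_b = 1 - sum(Fraction(1, 2 ** n) for n in missing)
print(missing, p_b)  # [1, 2, 3, 4, 6] 3/64
```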

32. Question

Fifteen football players of a club-team are given 15 T-shirts with their names written on the backside. If the players pick up the T-shirts randomly, then the probability that at least 3 players pick the correct T-shirt is : [JEE Main 2023 (Online) 29th January Morning Shift]

Required probability \(=1-\frac{D_{(15)}+{ }^{15} C_1 \cdot D_{(14)}+{ }^{15} C_2 D_{(13)}}{15!}\)

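Here \(D_{(n)}\) denotes the number of derangements of \(n\) objects. Using the approximation \(D_{(n)} \approx \frac{n!}{e}\), i.e., taking \(D _{(15)}\) as \(\frac{15!}{e}\),

\(D _{(14)}\) as \(\frac{14!}{e}\),

\(D _{(13)}\) as \(\frac{13!}{e}\),

we get, Required probability \(=1-\left(\frac{\frac{15!}{e}+15 \cdot \frac{14!}{e}+\frac{15 \times 14}{2} \times \frac{13!}{e}}{15!}\right)\)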

\(

=1-\left(\frac{1}{e}+\frac{1}{e}+\frac{1}{2 e}\right)=1-\frac{5}{2 e} \approx 0.08

\)
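The \(\frac{n!}{e}\) approximation can be compared with the exact derangement numbers. The sketch below uses the standard recurrence \(D_n=(n-1)\left(D_{n-1}+D_{n-2}\right)\) with \(D_0=1, D_1=0\), which is swapped in here for the approximation used in the solution; `derangements` is an illustrative helper name.

```python
from fractions import Fraction
from math import comb, e, factorial

def derangements(n):
    """Number of permutations of n items with no fixed point."""
    d_prev, d_curr = 1, 0  # D_0, D_1
    if n == 0:
        return d_prev
    for m in range(2, n + 1):
        d_prev, d_curr = d_curr, (m - 1) * (d_curr + d_prev)
    return d_curr

n = 15
# Permutations with exactly 0, 1 or 2 players holding their own T-shirt.
fewer_than_3 = sum(comb(n, k) * derangements(n - k) for k in range(3))
exact = 1 - Fraction(fewer_than_3, factorial(n))
approx = 1 - 5 / (2 * e)
print(round(float(exact), 4), round(approx, 4))  # 0.0803 0.0803
```

For \(n=15\) the exact value and the approximation agree to many decimal places, so the rounded answer is unaffected.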

33. Question

Let \(N\) be the sum of the numbers appeared when two fair dice are rolled and let the probability that \(N-2, \sqrt{3 N}, N+2\) are in geometric progression be \(\frac{k}{48}\). Then the value of \(k\) is : [JEE Main 2023 (Online) 25th January Evening Shift]

\(

\begin{aligned}

& n-2, \sqrt{3 n}, n+2 \rightarrow \text { G.P. } \\

& 3 n=n^2-4 \\

& \Rightarrow n^2-3 n-4=0 \\

& \Rightarrow n=4,-1 \text { (rejected) } \\

& P(N=4)=\frac{3}{36}=\frac{1}{12}=\frac{4}{48} \\

& \therefore k=4

\end{aligned}

\)

34. Question

Let M be the maximum value of the product of two positive integers when their sum is 66 . Let the sample space \(S=\left\{x \in Z : x(66-x) \geq \frac{5}{9} M\right\}\) and the event \(A=\{x \in S: x\) is a multiple of 3\(\}\). Then \(P(A)\) is equal to : [JEE Main 2023 (Online) 25th January Morning Shift]

\(

\begin{aligned}

& x+y=66 \\

& \frac{x+y}{2} \geq \sqrt{x y} \\

& \Rightarrow 33 \geq \sqrt{x y} \\

& \Rightarrow x y \leq 1089 \\

& \therefore M=1089 \\

& S: x(66-x) \geq \frac{5}{9} \cdot 1089 \\

& 66 x-x^2 \geq 605 \\

& \Rightarrow x^2-66 x+605 \leq 0 \\

& \Rightarrow(x-55)(x-11) \leq 0 ; 11 \leq x \leq 55 \\

& \text { Therefore } S=\{11,12,13 \ldots 55\} \\

&

\end{aligned}

\)

\(

\Rightarrow n(S)=45

\)

Elements of \(S\) which are multiples of 3 are \(12,15, \ldots, 54\); if there are \(n\) of them, then

\(

\begin{aligned}

& 12+(n-1) 3=54 \Rightarrow 3(n-1)=42 \Rightarrow n=15 \\

& n(A)=15 \\

& \Rightarrow P(A)=\frac{15}{45}=\frac{1}{3}

\end{aligned}

\)

35. Question

Let N denote the number that turns up when a fair die is rolled. If the probability that the system of equations

\(

\begin{aligned}

& x+y+z=1 \\

& 2 x+ N y+2 z=2 \\

& 3 x+3 y+ N z=3

\end{aligned}

\)

has a unique solution is \(\frac{k}{6}\), then the sum of the value of \(k\) and all possible values of \(N\) is : [JEE Main 2023 (Online) 24th January Morning Shift]

For unique solution \(\Delta \neq 0\)

\(

\begin{aligned}

& \text { i.e. }\left|\begin{array}{ccc}

1 & 1 & 1 \\

2 & N & 2 \\

3 & 3 & N

\end{array}\right| \neq 0 \\

& \Rightarrow\left(N^2-6\right)-(2 N-6)+(6-3 N) \neq 0 \\

& \Rightarrow N^2-5 N+6 \neq 0 \\

& \therefore N \neq 2 \text { and } N \neq 3

\end{aligned}

\)

\(\therefore\) Probability of not getting 2 or 3 in a throw of the die \(=\frac{4}{6}=\frac{2}{3}\)

As given \(\frac{2}{3}=\frac{k}{6} \Rightarrow k=4\)

\(\therefore\) The possible values of \(N\) are \(1,4,5,6\) and \(k=4\), so the required value \(=1+4+5+6+4=20\)

36. Question

Let \(\Omega\) be the sample space and \(A \subseteq \Omega\) be an event.

Given below are two statements :

(S1) : If \(P ( A )=0\), then \(A =\phi\)

(S2) : If \(P ( A )=1\), then \(A =\Omega\)

Then : [JEE Main 2023 (Online) 24th January Morning Shift]

\(\Omega=\) sample space

\(A=\) an event

As a counterexample, let \(\Omega=\) a wire of length 1 which starts at point 0 and ends at point 1 on the coordinate axis \(=[0,1]\)

\(A=\left\{\frac{1}{2}\right\}=\) selecting the point on the wire which is at \(\frac{1}{2}\), i.e., 0.5

As the wire is a 1-D object, from geometrical probability

\(

P(A)=\frac{\text { Favourable Length }}{\text { Total Length }}

\)

Here total length of wire \(=1\) unit and point has zero length so point \(A\) at \(\left\{\frac{1}{2}\right\}\) or 0.5 has length \(=0\).

\(\therefore\) Favorable length \(=0\)

\(\therefore P ( A )=0\) but \(A \neq \phi\)

Now \(\overline{ A }=[0,1]-\left\{\frac{1}{2}\right\}\)

So, length of \(\bar{A}=\) Length of entire wire – Length of point \(A=1\)

\(

\therefore P (\overline{ A })=1 \text { but } \overline{ A } \neq \Omega

\)

Then both statements are false.

Note:

Geometrical probability :

1. For 1-D object, \(P(A)=\frac{\text { Favourable Length }}{\text { Total Length }}\)

2. For 2-D object, \(P(A)=\frac{\text { Favourable Area }}{\text { Total Area }}\)

3. For 3-D object, \(P(A)=\frac{\text { Favourable Volume }}{\text { Total Volume }}\)

37. Question

Bag I contains 3 red, 4 black and 3 white balls and Bag II contains 2 red, 5 black and 2 white balls. One ball is transferred from Bag I to Bag II and then a ball is drawn from Bag II. The ball so drawn is found to be black in colour. Then the probability, that the transferred ball is red, is : [JEE Main 2022 (Online) 29th July Evening Shift]

Let \(E \rightarrow\) Ball drawn from Bag II is black.

\(E_R \rightarrow\) Bag I to Bag II red ball transferred.

\(E_B \rightarrow\) Bag I to Bag II black ball transferred.

\(E_W \rightarrow\) Bag I to Bag II white ball transferred.

\(

P\left(E_R / E\right)=\frac{P\left(E / E_R\right) \cdot P\left(E_R\right)}{P\left(E / E_R\right) P\left(E_R\right)+P\left(E / E_B\right) P\left(E_B\right)+P\left(E / E_W\right) P\left(E_W\right)}

\)

Here,

\(

P\left(E_R\right)=3 / 10, \quad P\left(E_B\right)=4 / 10, \quad P\left(E_W\right)=3 / 10

\)

and

\(

\begin{aligned}

& P\left(E / E_R\right)=5 / 10, \quad P\left(E / E_B\right)=6 / 10, \quad P\left(E / E_W\right)=5 / 10 \\

& \therefore \quad P\left(E_R / E\right)=\frac{15 / 100}{15 / 100+24 / 100+15 / 100} \\

& =\frac{15}{54}=\frac{5}{18}

\end{aligned}

\)

38. Question

Let \(S=\{1,2,3, \ldots, 2022\}\). Then the probability, that a randomly chosen number n from the set S such that \(\operatorname{HCF}( n , 2022)=1\), is : [JEE Main 2022 (Online) 29th July Morning Shift]

\(

\begin{aligned}

& S=\{1,2,3, \ldots \ldots \ldots .2022\} \\

& \operatorname{HCF}( n , 2022)=1 \\

& \Rightarrow n \text { and } 2022 \text { have no common factor } \\

& \text { Total elements }=2022 \\

& 2022=2 \times 3 \times 337

\end{aligned}

\)

M : numbers divisible by 2 .

\(

\{2,4,6, \ldots \ldots . ., 2022\} \quad n(M)=1011

\)

N : numbers divisible by 3 .

\(

\{3,6,9, \ldots \ldots \ldots, 2022\} \quad n(N)=674

\)

L : numbers divisible by 6 .

\(

\begin{aligned}

& \{6,12,18, \ldots \ldots . .2022\} \quad n(L)=337 \\

& n(M \cup N)=n(M)+n(N)-n(L) \\

& =1011+674-337 \\

& =1348

\end{aligned}

\)

O : numbers divisible by 337 but not in \(M \cup N\)

\(

\{337,1685\}

\)

Number divisible by 2, 3 or 337

\(

\begin{aligned}

& =1348+2=1350 \\

& \text { Required probability }=\frac{2022-1350}{2022} \\

& =\frac{672}{2022} \\

& =\frac{112}{337}

\end{aligned}

\)
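The count of 672 numbers coprime to 2022 can be confirmed directly with the standard-library `math.gcd`. A minimal sketch:

```python
from fractions import Fraction
from math import gcd

# Count n in {1, ..., 2022} with HCF(n, 2022) = 1.
coprime = sum(1 for n in range(1, 2023) if gcd(n, 2022) == 1)
probability = Fraction(coprime, 2022)
print(coprime, probability)  # 672 112/337
```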

39. Question

Let A and B be two events such that \(P(B \mid A)=\frac{2}{5}, P(A \mid B)=\frac{1}{7}\) and \(P(A \cap B)=\frac{1}{9}\). Consider

(S1) \(P\left(A^{\prime} \cup B\right)=\frac{5}{6}\),

(S2) \(P\left(A^{\prime} \cap B^{\prime}\right)=\frac{1}{18}\)

Then : [JEE Main 2022 (Online) 28th July Evening Shift]

\(

\begin{aligned}

&\begin{aligned}

& P(A / B)=\frac{1}{7} \Rightarrow \frac{P(A \cap B)}{P(B)}=\frac{1}{7} \\

& \Rightarrow P(B)=\frac{7}{9} \\

& P(B / A)=\frac{2}{5} \Rightarrow \frac{P(A \cap B)}{P(A)}=\frac{2}{5} \\

& P(A)=\frac{5}{2} \cdot \frac{1}{9}=\frac{5}{18} \\

& S 2: P\left(A^{\prime} \cap B^{\prime}\right)=1-P(A \cup B)=1-\left(\frac{5}{18}+\frac{7}{9}-\frac{1}{9}\right)=\frac{1}{18}

\end{aligned}\\

&S 1 \text { : } P\left(A^{\prime} \cup B\right)=P(A \cap B)+P\left(A^{\prime} \cap B\right)+P\left(A^{\prime} \cap B^{\prime}\right)=\frac{1}{9}+\frac{6}{9}+\frac{1}{18}=\frac{5}{6} \text {. }

\end{aligned}

\)

40. Question

Out of \(60 \%\) female and \(40 \%\) male candidates appearing in an exam, \(60 \%\) candidates qualify it. The number of females qualifying the exam is twice the number of males qualifying it. A candidate is randomly chosen from the qualified candidates. The probability, that the chosen candidate is a female, is : [JEE Main 2022 (Online) 28th July Morning Shift]

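Of all the candidates, \(60 \%\) qualify, and the qualifying females are twice the qualifying males, so \(40 \%\) of all candidates are qualifying females and \(20 \%\) are qualifying males.

\(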

\begin{aligned}

& P(\text { Female })=\frac{60}{100}=\frac{3}{5} \\

& P(\text { Male })=\frac{2}{5} \\

& P(\text { Female/Qualified })=\frac{40}{60}=\frac{2}{3} \\

& P(\text { Male/qualified })=\frac{20}{60}=\frac{1}{3}

\end{aligned}

\)

41. Question

A six faced die is biased such that

\(3 \times P (\) a prime number \()=6 \times P (\) a composite number \()=2 \times P (1)\).

Let \(X\) be a random variable that counts the number of times one gets a perfect square on some throws of this die. If the die is thrown twice, then the mean of \(X\) is : [JEE Main 2022 (Online) 27th July Evening Shift]

Let \(P (\) a prime number \()=\alpha\)

\(P (\) a composite number \()=\beta\)

and \(P(1)=\gamma\)

\(\because 3 \alpha=6 \beta=2 \gamma=k\) (say)

and \(3 \alpha+2 \beta+\gamma=1\), since the die has three prime faces \((2,3,5)\), two composite faces \((4,6)\) and the face 1

\(

\Rightarrow k+\frac{k}{3}+\frac{k}{2}=1 \Rightarrow k=\frac{6}{11}

\)

Mean \(=n p\) where \(n=2\)

and \(p=\) probability of getting perfect square

\(

=P(1)+P(4)=\frac{k}{2}+\frac{k}{6}=\frac{4}{11}

\)

So, mean \(=2 \cdot\left(\frac{4}{11}\right)=\frac{8}{11}\)
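The same mean can be obtained by normalising the face weights directly. A minimal sketch, assuming (as in the solution) that each prime face has weight \(k/3\), each composite face \(k/6\) and the face 1 has weight \(k/2\); the function name is illustrative.

```python
from fractions import Fraction

def mean_perfect_squares(throws=2):
    # Relative weights with k = 1: primes k/3, composites k/6, face 1 k/2
    weights = {1: Fraction(1, 2), 2: Fraction(1, 3), 3: Fraction(1, 3),
               4: Fraction(1, 6), 5: Fraction(1, 3), 6: Fraction(1, 6)}
    total = sum(weights.values())
    prob = {face: w / total for face, w in weights.items()}
    p_square = prob[1] + prob[4]   # perfect squares on a die: 1 and 4
    return throws * p_square       # mean of a binomial variable is n * p

print(mean_perfect_squares())  # 8/11
```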

Question 42 of 190

42. Question

Let \(S\) be the sample space of all five digit numbers. If \(p\) is the probability that a randomly selected number from \(S\) is a multiple of 7 but not divisible by 5, then \(9 p\) is equal to : [JEE Main 2022 (Online) 27th July Morning Shift]

Among the 5 digit numbers,

First number divisible by 7 is 10003 and last is 99995 .

\(

\begin{aligned}

& \Rightarrow \text { Number of numbers divisible by } 7 . \\

& =\frac{99995-10003}{7}+1 \\

& =12857

\end{aligned}

\)

First number divisible by 35 is 10010 and last is 99995.

\(

\begin{aligned}

& \Rightarrow \text { Number of numbers divisible by } 35 \\

& =\frac{99995-10010}{35}+1 \\

& =2572

\end{aligned}

\)

Hence the number of numbers divisible by 7 but not by 5

\(
\begin{aligned}
& =12857-2572 \\
& =10285
\end{aligned}
\)

Since there are \(90000\) five digit numbers,

\(
\begin{aligned}
& 9 p=\frac{10285}{90000} \times 9 \\
& =1.0285
\end{aligned}
\)
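The count is small enough to confirm by brute force. A sketch with illustrative function names:

```python
from fractions import Fraction

def count_multiples_of_7_not_5():
    # Scan every five digit number, 10000 to 99999 inclusive
    return sum(1 for n in range(10000, 100000) if n % 7 == 0 and n % 5 != 0)

def nine_p():
    # 90000 five digit numbers in total
    return Fraction(9 * count_multiples_of_7_not_5(), 90000)

print(count_multiples_of_7_not_5())  # 10285
print(float(nine_p()))               # 1.0285
```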

Question 43 of 190

43. Question

Let \(E _1, E _2, E _3\) be three mutually exclusive events such that \(P \left( E _1\right)=\frac{2+3 p }{6}, P \left( E _2\right)=\frac{2- p }{8}\) and \(P \left( E _3\right)=\frac{1- p }{2}\). If the maximum and minimum values of \(p\) are \(p_1\) and \(p_2\), then \(\left(p_1+p_2\right)\) is equal to : [JEE Main 2022 (Online) 26th July Morning Shift]


\(
\begin{aligned}
& 0 \leq \frac{2+3 p}{6} \leq 1 \Rightarrow p \in\left[-\frac{2}{3}, \frac{4}{3}\right] \\
& 0 \leq \frac{2-p}{8} \leq 1 \Rightarrow p \in[-6,2] \\
& 0 \leq \frac{1-p}{2} \leq 1 \Rightarrow p \in[-1,1] \\
& 0 \leq P\left(E_1\right)+P\left(E_2\right)+P\left(E_3\right) \leq 1 \quad \text { (mutually exclusive events) } \\
& 0 \leq \frac{13}{12}-\frac{p}{8} \leq 1 \\
& p \in\left[\frac{2}{3}, \frac{26}{3}\right]
\end{aligned}
\)

Taking intersection of all

\(
\begin{aligned}
& p \in\left[\frac{2}{3}, 1\right] \\
& p_1+p_2=1+\frac{2}{3}=\frac{5}{3}
\end{aligned}
\)
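Each constraint is linear in \(p\), so the feasible range can be computed mechanically. A sketch, writing each probability as \(a+b p\); the helper names `solve_unit_interval` and `feasible_range` are illustrative.

```python
from fractions import Fraction as F

def solve_unit_interval(a, b):
    # Solve 0 <= a + b*p <= 1 for p (b must be non-zero)
    lo, hi = (0 - a) / b, (1 - a) / b
    return (lo, hi) if b > 0 else (hi, lo)

def feasible_range():
    # (a, b) pairs for P(E1), P(E2), P(E3) and their sum 13/12 - p/8
    constraints = [(F(1, 3), F(1, 2)), (F(1, 4), F(-1, 8)),
                   (F(1, 2), F(-1, 2)), (F(13, 12), F(-1, 8))]
    intervals = [solve_unit_interval(a, b) for a, b in constraints]
    return max(lo for lo, _ in intervals), min(hi for _, hi in intervals)

p2, p1 = feasible_range()
print(p2, p1, p1 + p2)  # 2/3 1 5/3
```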

Question 44 of 190

44. Question

If \(A\) and \(B\) are two events such that \(P(A)=\frac{1}{3}, P(B)=\frac{1}{5}\) and \(P(A \cup B)=\frac{1}{2}\), then \(P\left(A \mid B^{\prime}\right)+P\left(B \mid A^{\prime}\right)\) is equal to : [JEE Main 2022 (Online) 25th July Evening Shift]


\(

\begin{aligned}

& P(A)=\frac{1}{3}, P(B)=\frac{1}{5} \text { and } P(A \cup B)=\frac{1}{2} \\

& \therefore P(A \cap B)=\frac{1}{3}+\frac{1}{5}-\frac{1}{2}=\frac{1}{30}

\end{aligned}

\)

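Now, \(P\left(A \cap B^{\prime}\right)=P(A)-P(A \cap B)=\frac{9}{30}\) and \(P\left(B \cap A^{\prime}\right)=P(B)-P(A \cap B)=\frac{5}{30}\), so

\(P\left(A \mid B^{\prime}\right)+P\left(B \mid A^{\prime}\right)=\frac{P\left(A \cap B^{\prime}\right)}{P\left(B^{\prime}\right)}+\frac{P\left(B \cap A^{\prime}\right)}{P\left(A^{\prime}\right)}\)

\(
=\frac{\frac{9}{30}}{\frac{4}{5}}+\frac{\frac{5}{30}}{\frac{2}{3}}=\frac{3}{8}+\frac{1}{4}=\frac{5}{8}
\)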
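The arithmetic can be verified with exact fractions. A minimal sketch; the function name and argument names are illustrative.

```python
from fractions import Fraction

def conditional_sum(p_a=Fraction(1, 3), p_b=Fraction(1, 5), p_union=Fraction(1, 2)):
    p_both = p_a + p_b - p_union                 # P(A and B)
    a_given_not_b = (p_a - p_both) / (1 - p_b)   # P(A | B')
    b_given_not_a = (p_b - p_both) / (1 - p_a)   # P(B | A')
    return a_given_not_b + b_given_not_a

print(conditional_sum())  # 5/8
```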

Question 45 of 190

45. Question

If the numbers appeared on the two throws of a fair six faced die are \(\alpha\) and \(\beta\), then the probability that \(x^2+\alpha x+\beta>0\), for all \(x \in R\), is : [JEE Main 2022 (Online) 25th July Morning Shift]


For \(x^2+\alpha x+\beta>0 \forall x \in R\) to hold, we should have \(\alpha^2-4 \beta<0\)

If \(\alpha=1, \beta\) can be \(1,2,3,4,5,6\) i.e., 6 choices

If \(\alpha=2, \beta\) can be \(2,3,4,5,6\) i.e., 5 choices

If \(\alpha=3, \beta\) can be \(3,4,5,6\) i.e., 4 choices

If \(\alpha=4, \beta\) can be 5 or 6 i.e., 2 choices

If \(\alpha=5\) or \(\alpha=6\), no value of \(\beta\) is possible, i.e., 0 choices each

Hence total favourable outcomes

\(

\begin{aligned}

& =6+5+4+2+0+0 \\

& =17

\end{aligned}

\)

Total possible choices for \(\alpha\) and \(\beta=6 \times 6=36\)

Required probability \(=\frac{17}{36}\)
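The case-by-case count above can be confirmed by listing all 36 outcomes. A sketch with an illustrative function name:

```python
from fractions import Fraction
from itertools import product

def prob_always_positive():
    # x^2 + a*x + b > 0 for all real x exactly when the discriminant a^2 - 4b < 0
    favourable = sum(1 for a, b in product(range(1, 7), repeat=2)
                     if a * a - 4 * b < 0)
    return Fraction(favourable, 36)

print(prob_always_positive())  # 17/36
```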

Question 46 of 190

46. Question

The probability that a relation \(R\) from \(\{x, y\}\) to \(\{x, y\}\) is both symmetric and transitive, is equal to: [JEE Main 2022 (Online) 29th June Evening Shift]


Total number of relations \(=2^{2^2}=2^4=16\)

Relations that are symmetric as well as transitive are

\(

\phi,\{(x, x)\},\{(y, y)\},\{(x, x),(x, y),(y, y),(y, x)\},\{(x, x),(y, y)\}

\)

\(\therefore\) favourable cases \(=5\)

\(

\therefore \quad P_r=\frac{5}{16}

\)
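With only 16 relations, the list above can be checked exhaustively. A sketch; the function name is illustrative.

```python
from itertools import combinations, product

def count_symmetric_transitive(elements=("x", "y")):
    pairs = list(product(elements, repeat=2))
    total = favourable = 0
    # Every subset of the ordered pairs is a relation
    for size in range(len(pairs) + 1):
        for subset in combinations(pairs, size):
            rel = set(subset)
            total += 1
            symmetric = all((b, a) in rel for a, b in rel)
            transitive = all((a, d) in rel
                             for a, b in rel for c, d in rel if b == c)
            if symmetric and transitive:
                favourable += 1
    return favourable, total

print(count_symmetric_transitive())  # (5, 16)
```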

Question 47 of 190

47. Question

The probability that a randomly chosen \(2 \times 2\) matrix with all the entries from the set of first 10 primes, is singular, is equal to : [JEE Main 2022 (Online) 29th June Morning Shift]


Let M be a \(2 \times 2\) matrix such that \(M =\left[\begin{array}{cc}m & n \\ o & p\end{array}\right]\). For M to be a singular matrix, \(| M |=0\)

\(
\Rightarrow mp - on =0
\)

Case 1: All four elements are equal

\(
\begin{aligned}
& m = n = o = p \\
& \Rightarrow mp - on =0
\end{aligned}
\)

So, number of matrices possible \(=10\)

Case 2: When two prime numbers are used

\(\Rightarrow\) Either \(m = n\) and \(o = p\) or \(m = o\) and \(n = p\)

So, number of matrices possible \(={ }^{10} C _2 \times 2!\times 2!\)

\(

\begin{aligned}

& \Rightarrow \frac{10 \times 9}{2} \times 2 \times 2 \\

& =180

\end{aligned}

\)

So, total number of singular matrices \(=10+180=190\)

And total number of matrices that can be formed \(=10 \times 10 \times 10 \times 10=10^4\)

So, required probability \(=\frac{190}{10^4}=\frac{19}{10^3}\)
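Since there are only \(10^4\) matrices, the two cases can be cross-checked by direct enumeration. A sketch with illustrative names:

```python
from fractions import Fraction
from itertools import product

PRIMES = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]  # first 10 primes

def count_singular():
    # Matrix [[m, n], [o, p]] is singular when m*p - o*n == 0
    return sum(1 for m, n, o, p in product(PRIMES, repeat=4)
               if m * p - o * n == 0)

def prob_singular():
    return Fraction(count_singular(), len(PRIMES) ** 4)

print(count_singular(), prob_singular())  # 190 19/1000
```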

Question 48 of 190

48. Question

The probability that a randomly chosen one-one function from the set \(\{a, b, c, d\}\) to the set \(\{1,2,3,4,5\}\) satisfies \(f(a)+2 f(b)-f(c)=f(d)\) is : [JEE Main 2022 (Online) 28th June Evening Shift]


Number of one-one functions from \(\{a, b, c, d\}\) to the set \(\{1,2,3,4,5\}\) is \(n(S)={ }^5 P_4=120\).

The possible values of \((f(a), f(b), f(c), f(d))\) such that \(f(a)+2 f(b)-f(c)=f(d)\) are \((5,1,3,4),(5,1,4,3),(4,2,3,5),(4,2,5,3),(1,3,2,5)\) and \((1,3,5,2)\)

\(

\therefore n(E)=6

\)

\(\therefore\) Required probability \(=\frac{n(E)}{n(S)}=\frac{6}{120}=\frac{1}{20}\)
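The favourable functions can be listed by running over all 120 one-one assignments. A sketch; the function names are illustrative and each tuple is ordered as \((f(a), f(b), f(c), f(d))\).

```python
from fractions import Fraction
from itertools import permutations

def matching_functions():
    # Each 4-permutation of {1,...,5} is one one-one function (f(a), f(b), f(c), f(d))
    return [(fa, fb, fc, fd)
            for fa, fb, fc, fd in permutations(range(1, 6), 4)
            if fa + 2 * fb - fc == fd]

def probability():
    total = len(list(permutations(range(1, 6), 4)))  # 5P4 = 120
    return Fraction(len(matching_functions()), total)

print(len(matching_functions()), probability())  # 6 1/20
```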

Question 49 of 190

49. Question

The probability, that in a randomly selected 3-digit number at least two digits are odd, is : [JEE Main 2022 (Online) 28th June Morning Shift]


At least two digits are odd

= exactly two digits are odd + exactly three digits are odd

For exactly three digits odd: \(5 \times 5 \times 5=125\)

For exactly two digits odd:

If 0 is used then: \(2 \times 5 \times 5=50\)

If 0 is not used then: \({ }^3 C _1 \times 4 \times 5 \times 5=300\)

Total number of 3-digit numbers \(=900\)

Required Probability \(=\frac{125+50+300}{900}=475 / 900\)

\(=19 / 36\)
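The total of 475 can be confirmed by scanning all 3-digit numbers. A sketch with an illustrative function name:

```python
from fractions import Fraction

def count_at_least_two_odd():
    # Count the numbers 100..999 that have two or three odd digits
    return sum(1 for n in range(100, 1000)
               if sum(int(ch) % 2 for ch in str(n)) >= 2)

print(count_at_least_two_odd())                 # 475
print(Fraction(count_at_least_two_odd(), 900))  # 19/36
```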

Question 50 of 190

50. Question

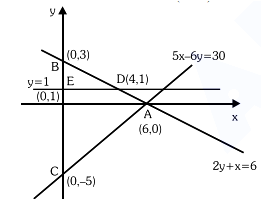

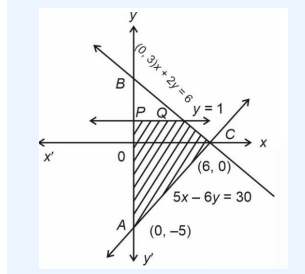

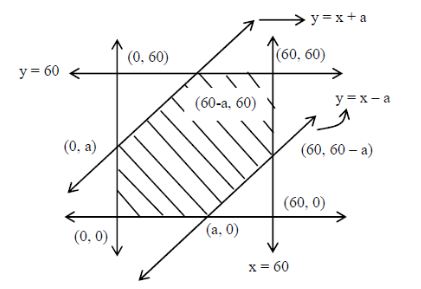

If a point \(A(x, y)\) lies in the region bounded by the \(y\)-axis, straight lines \(2 y+x=6\) and \(5 x-6 y=30\), then the probability that \(y<1\) is : [JEE Main 2022 (Online) 27th June Evening Shift]


\(

\text { Required probability = ar(ADEC)/ar(ABC) }

\)

\(

\begin{aligned}

& =1-\frac{\operatorname{ar}( BDE )}{\operatorname{ar}( ABC )} \\

& =1-\frac{\frac{1}{2} \times 2 \times 4}{\frac{1}{2} \times 8 \times 6}=1-\frac{1}{6}=\frac{5}{6}

\end{aligned}

\)

Alternate:

\(

\begin{aligned}

& =\frac{\text { Area of Region } P Q C A P}{\text { Area of Region } A B C A} \\

& =\frac{\frac{1}{2} \times 8 \times 6-\frac{1}{2} \times 2 \times 4}{\frac{1}{2} \times 8 \times 6} \\

& =\frac{5}{6}

\end{aligned}

\)
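The areas can also be computed from coordinates. This sketch assumes the vertices obtained by solving the given lines: the triangle has corners \((0,3)\), \((0,-5)\) and \((6,0)\), and the part with \(y \geq 1\) is the triangle with corners \((0,3)\), \((0,1)\) and \((4,1)\). The names `shoelace_area` and `prob_y_below_one` are illustrative.

```python
from fractions import Fraction

def shoelace_area(points):
    # Area of a simple polygon from its vertices (shoelace formula)
    twice_area = 0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(Fraction(twice_area, 2))

def prob_y_below_one():
    whole = shoelace_area([(0, 3), (0, -5), (6, 0)])  # full region
    upper = shoelace_area([(0, 3), (0, 1), (4, 1)])   # part with y >= 1
    return 1 - upper / whole

print(prob_y_below_one())  # 5/6
```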

Question 51 of 190

51. Question